Capacity Assessment

Introduction

The Foundations for Evidence-Based Policymaking Act of 2018 requires agencies to “continually assess the coverage, quality, methods, consistency, effectiveness, independence, and balance of the portfolio of evaluations, policy research, and ongoing evaluation activities of the agency”[42]. The capacity assessment is a required organizational review of the agency’s ability and infrastructure to generate and use evidence to better inform decisions and to improve policies and services for its customers.

The capacity assessment must include:

- a list of the activities and operations of the agency that are currently being evaluated and analyzed;

- the extent to which the evaluations, research, and analysis efforts and related activities of the agency support the needs of various divisions within the agency;

- the extent to which the evaluation research and analysis efforts and related activities of the agency address an appropriate balance between needs related to organizational learning, ongoing program management, performance management, strategic management, interagency and private sector coordination, internal and external oversight, and accountability;

- the extent to which the agency uses methods and combinations of methods that are appropriate to agency divisions and the corresponding research questions being addressed, including an appropriate combination of formative and summative evaluation research and analysis approaches;

- the extent to which evaluation and research capacity is present within the agency to include personnel and agency processes for planning and implementing evaluation activities, disseminating best practices and findings, and incorporating employee views and feedback; and

- the extent to which the agency has the capacity to assist agency staff and program offices to develop the capacity to use evaluation research and analysis approaches and data in the day-to-day operations.[43]

The assessment provides decision-makers “with information needed to improve the agency’s ability to support the development and use of evaluation, coordinate and increase technical expertise available for evaluation and related research activities within the agency, and improve the quality of evaluations and knowledge of evaluation methodology and standards.”[44]

OPM’s capacity assessment focuses on 1) research, analysis, evaluation, and statistics activities undertaken by the agency; 2) the balance and appropriateness of OPM’s evidence activities; 3) the effectiveness of OPM’s evidence activities; and 4) agency capacity to generate and use evidence.

In assessing OPM’s research, analysis, statistics, and evaluation activities, the following dimensional attributes are considered:

- Coverage. Coverage considers what evidence practices are occurring and where within the agency they occur. The assessment also considers whether and to what extent evidence practices support agency strategic goals and objectives, and whether each evidence practice is used for operational, management, and policy decision-making.

- Quality. Quality considers ethics, scientific integrity, and the quality of the data and evidence produced. Quality is specific to the evidence practice being assessed and reflects standards such as relevance, accuracy, timeliness, credibility, objectivity, utility, integrity, and transparency.

- Method. Method refers to the techniques, systems, and processes used in evidence generation. Methods vary by evidence practice; however, appropriate and rigorous methodological approaches are systematic, empirically grounded, and best support definitive answers to the questions under investigation.

- Independence. Independence denotes that evidence practices and evidence-building activities are free from bias and inappropriate influence. Independence also considers internal and external oversight, with identified accountabilities and controls.

- Effectiveness. Effectiveness indicates whether evidence practices and evidence-building activities support the agency’s intended outcomes. Effectiveness also considers the balance among organizational learning, program management, performance management, strategic decision-making, and interagency and private sector coordination.

Methodology

OPM collected or leveraged data from multiple sources to accomplish its objectives. These included:

- A review of each office’s current evidence activities. In FY 2020, OPM compiled a list of evidence activities that were planned or currently being conducted. In FY 2021, OPM updated the list to reflect new activities aligned to OPM’s new strategic plan and priorities.

- A review of each office’s staff working on evidence activities and their research, evaluation, analysis, and statistical capacity. In FY 2020, OPM developed a list of all staff who spent at least 25 percent of their time on evidence-related activities. This list includes each staff member’s name, position title, current evidence work, and evidence skills.

- GAO’s Results for Decision-making survey, conducted in FY 2020, which OPM used as an organizational baseline for measuring coverage, quality, methods, independence, and effectiveness for some evidence activities and for assessing capacity for evidence generation and use. OPM used this survey because it provides a benchmark for comparing OPM to the federal government overall. A total of 89 OPM managers responded to the survey, for a weighted response rate of 66 percent. The findings should be interpreted with caution because respondents may not be well positioned to assess all dimensions of OPM’s evidence capacity.

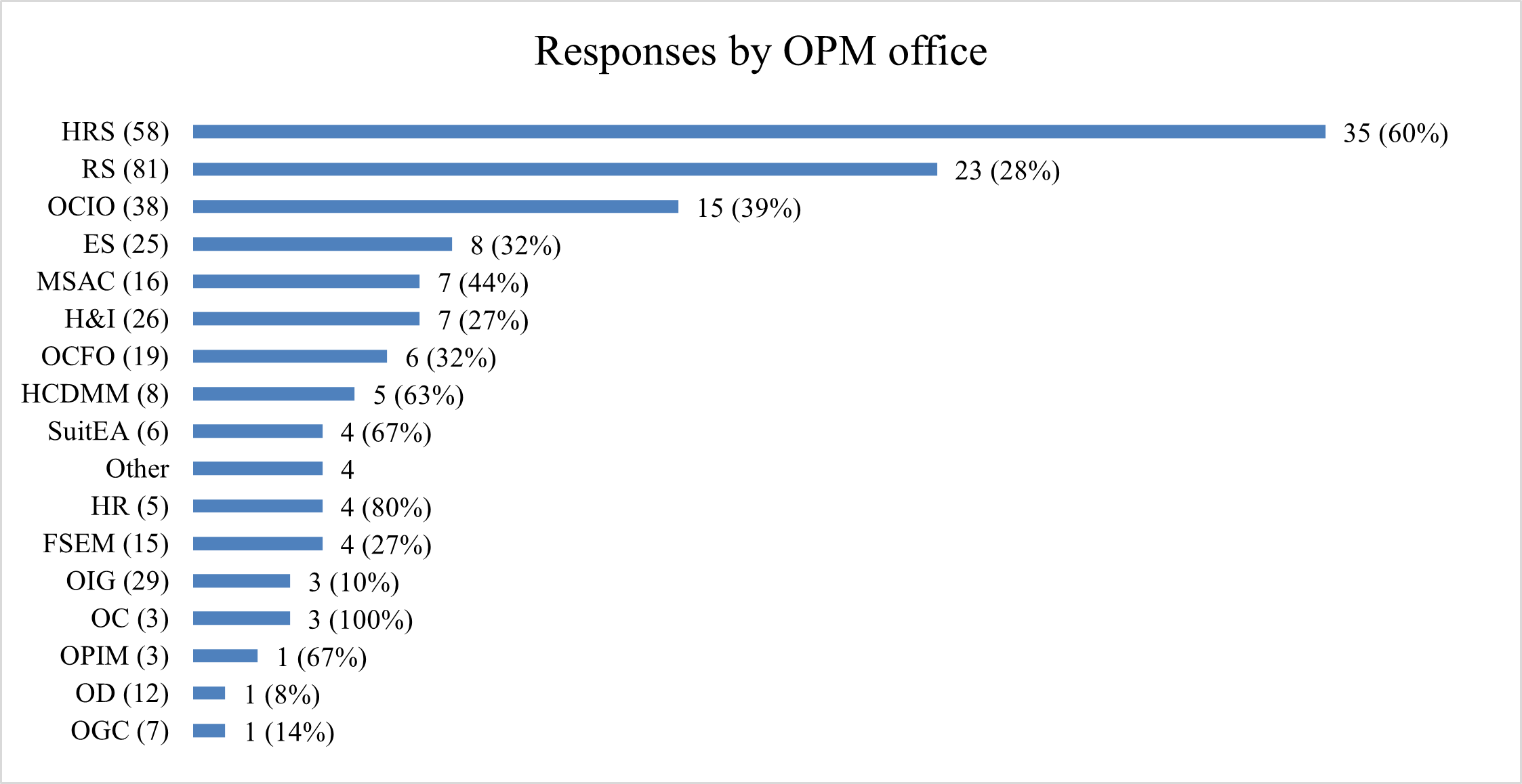

- An internal survey of OPM supervisors, managers, and senior leaders, which OPM conducted in FY 2021 to assess office-level evidence generation practices and address gaps in the GAO survey. This survey asked respondents to assess their office’s evidence generation practices, including the coverage, quality, methods, independence, and effectiveness of research, analysis, evaluation, and statistics. For these questions, the survey used question formulations and scales similar to those of the GAO survey to promote continuity. The survey also asked about office-level staffing models and staff capacity for each evidence type. A total of 132 OPM managers, supervisors, and senior leaders responded to the survey, for a response rate of 38 percent, though only 85 of these indicated a need for evidence and responded to the evidence questions. Office-level response rates varied from 14 percent to 100 percent; some office-level findings should therefore be treated with caution.

To design and implement these approaches, OPM engaged its Research Steering Committee, a group of key evidence generation leaders at OPM, as well as senior leaders, managers, and supervisors to provide input on the process and format of completing the capacity assessment and to respond to data requests and surveys.

To assess which types of evidence-building activities are happening and where, OPM reviewed FY 2020-FY 2022 research, analysis, statistical, and evaluation projects by office. The resulting inventory reflects the agency’s current ability to generate and use evidence to improve policies and services for its customers.

The purpose of the inventory is to identify and take stock of the different types of evidence the agency is generating and the different purposes these projects serve.

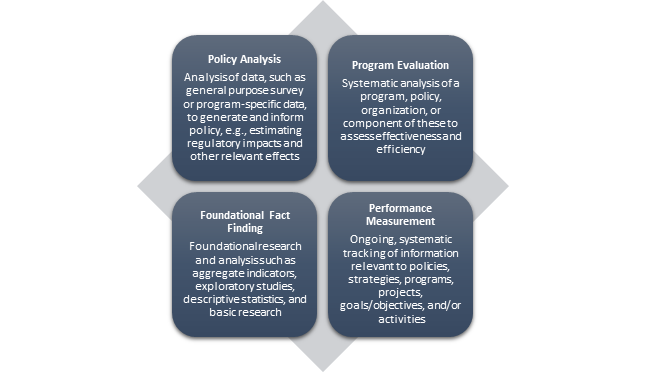

The different types of evidence specified by the Office of Management and Budget are foundational fact finding, policy analysis, performance measurement, and evaluation, as detailed below.

The evidence activities were classified by implementing office and by evidence type (except performance measurement, which was not covered in the review).

OPM will use the inventory to identify opportunities to continue to build and use evidence that will support the agency’s achievement of its FY 2022-2026 strategic goals. Further, the inventory will help the agency to identify opportunities for intra-agency collaboration and cross-cutting learning and evidence-building activities.

Inventory of OPM’s Research, Analysis, Statistics, and Evaluation Activities

| Office | Focus Area | Activity | Type |
|---|---|---|---|
| Human Resource Solutions | Workforce Planning and Reshaping | Organization and Workload Analysis for External Agency. How many and what type of employees are needed to meet mission demands? The purpose is to inform resource decisions (that is, staffing plans) and to improve organizational structure and position management for agencies that are OPM’s customers. | Foundational fact finding |
| Human Resource Solutions | Leadership and Workforce Development Assessment | Federal Workforce Competency Initiative (formerly MOSAIC Refresh). What are the critical general competencies and tasks required for federal occupational series? The purpose is to identify future HRS needs and to strengthen policy and classification work. | Foundational fact finding |
| Human Resource Solutions | Performance Management and Strategy | OPM HR Specialist Performance Management Element and Standards Project. Can we standardize the performance elements and standards for employees in core support positions (starting with the 201 series)? The purpose is to standardize performance elements and standards for these core support positions that agencies can implement in their workforce. | Policy analysis |
| Human Resource Solutions | Performance Management and Strategy | OPM Continuous Performance Feedback in a Virtual Environment. What are best practices for the future of work? The findings will be used to provide a guide or resource to assist federal agencies in managing performance in a virtual remote work environment. | Foundational fact finding |
| Human Resource Solutions | Organizational Assessment | Program Evaluation of an External Agency Scholarship Program. To what extent is the program successful in training, recruiting, and retaining graduates? The purpose is to identify program efficacy, make programmatic changes, and prepare the external agency for Congressional inquiries, as requested. | Evaluation |
| Employee Services | Strategic Workforce Planning | Foresight Study. What is the future of work and how can we identify emerging skills? How might technology and other factors influence how the workforce accomplishes its mission? The ongoing data gathering will be used to inform policy and build a long-term strategic plan for federal agencies. | Policy analysis |
| Employee Services | Strategic Workforce Planning | Federal Workforce Priorities Report. What should the priorities be for the workforce across the federal government? The findings will be used to set priorities for agencies throughout the federal government. | Policy analysis |
| Employee Services | Strategic Workforce Planning | CHCO Manager Satisfaction Survey. What barriers are occurring in the hiring process? The ongoing survey findings are used for agency measures, HRStat reviews, and improvements to agency hiring processes. | Foundational fact finding |
| Employee Services | Strategic Workforce Planning | Workforce Dashboards. How are agencies performing on key human capital metrics? The findings will be used to support agencies in improving key human capital measures. | Foundational fact finding |
| Employee Services | Strategic Workforce Planning | Mission Critical Occupations Resources/Time-to-Hire Analysis. How are agencies doing on improving time to hire and closing staffing gaps? The findings will be used to analyze agencies’ efforts in reducing time-to-hire and closing staffing gaps. | Foundational fact finding |
| Employee Services | Strategic Workforce Planning | Closing Skill Gaps/Multi-Factor Model Analysis. How are agencies doing in mitigating agency-specific and government-wide skills gaps? The findings will be used to close skill gaps and support OPM's efforts to remove strategic human capital management from the GAO High-Risk List. | Foundational fact finding |
| Employee Services | Work-Life/Wellness/Telework | Telework Report to Congress. What is the state of telework in the federal government? The purpose is to collect information on telework eligibility, telework participation, frequency of telework participation, agency methods for gathering telework data, progress in setting and meeting participation and outcome goals, agency management efforts to promote telework, and best practices in agency telework programs. | Foundational fact finding |
| Employee Services | Work-Life/Wellness/Telework | WellCheck Data Call Survey. How are agencies doing in implementing workplace health and wellness strategies? OPM offers this online assessment to federal agencies. The survey is used to provide agencies with health and wellness program benchmarking data. | Foundational fact finding |
| Employee Services | Work-Life/Wellness/Telework | Child Care Subsidy Programs Interviews with Federal Dependent Care Coordinators. What support do agencies need from OPM regarding the child care subsidy program? The purpose is to help inform OPM of the support agencies need for the Federal Child Care Subsidy Program and inform updates to Federal Care guidance. | Foundational fact finding |
| Employee Services | Senior Executive Service | SES Exit Survey. Why do executives leave the federal government? The survey offers an opportunity for executives to provide candid feedback about their work experience and is used to provide insights on SES experiences from exiting SES members. | Foundational fact finding |
| Employee Services | Senior Executive Service | SES Onboarding Survey. What is the work experience like for newly onboarded executives in the federal government? The survey offers an opportunity for executives to provide candid feedback about their work experience and is used to provide insights on experiences from entering SES members. | Foundational fact finding |
| Employee Services | Senior Executive Service | Executive Rotations Analysis. What percent of SES members complete a rotation? The goal is to verify fulfillment of the annual government-wide goal of 15 percent of SES members completing rotations and provide supporting guidance and resources as applicable. | Foundational fact finding |
| Employee Services | Leadership Development | President's Management Council (PMC) Interagency Rotation Program (IRP) Program Evaluation. Is the PMC IRP meeting its intended goal and are participants satisfied? The purpose is to assess the success of the program in meeting outlined goals and to use the feedback provided to make changes to program implementation. | Evaluation |
| Employee Services | Leadership Development | Federal Coaching Evaluation Open Survey. Do agencies and departments have coaches for employees, and if so, what are employees’ coaching experiences like? The survey provides information to agencies on employee coaching experiences. | Foundational fact finding |
| Employee Services | Pay and Leave | Annual Report of the President’s Pay Agent. What are the methods for determining GS locality pay comparability? The purpose of this report is to set pay for General Schedule positions. | Policy analysis |
| Employee Services | Pay and Leave | Reviews of Federal Wage System Wage Area Geographic Boundaries. What are the methods for determining local prevailing wage levels? The purpose of this analysis is to set wage levels for Federal Wage System positions. | Policy analysis |
| Employee Services | Pay and Leave | Physicians Comparability Allowance Report to Congress. What is the state of agency use of physician comparability allowances to address physician recruitment and retention problems? The purpose is to develop guidance for physician allowances. | Policy analysis |
| Employee Services | Pay and Leave | Gender Pay Gap Analysis. What is the size of the gender pay gap? Findings will be used to compare the gender pay gap over time and across various factors to identify reasons for the gap and possible policy guidance. | Foundational fact finding |
| Employee Services | Pay and Leave | Recruitment, Relocation, and Retention Incentive Waiver Requests. Is there evidence of recruitment or retention problems for the position(s) involved? The findings are used to support or justify waiver requests. | Foundational fact finding |
| Employee Services | Pay and Leave | Annual Student Loan Repayment Program Report to Congress. What is the state of agency use of the student loan repayment program to address recruitment and retention problems? The findings are used to provide information for student loan policy. | Policy analysis |
| Employee Services | Survey Analysis | Federal Employee Benefit Survey. What is the importance, adequacy, and value of federal employee benefits? Do federal employees believe that the available benefits meet their needs? Do federal employees understand the flexibilities and benefits available to them? The results of the semi-annual survey have been used to trend benefit program ratings over time and assess the employee perspective on the adequacy, value, and importance of each program. | Foundational fact finding |
| Employee Services | Survey Analysis | OPM Federal Employee Viewpoint Survey (FEVS). What are federal employees’ perceptions of their work experience and agency leadership? The results of this annual survey provide insight into employee engagement and agency culture. | Foundational fact finding |
| Employee Services | Survey Analysis | OPM FEVS Modernization and Improvement. How can the decades-old OPM FEVS be improved to align with the research literature and practice to (1) provide federal agencies and OPM program and policy offices with valid and reliable data on timely, responsive, and actionable topics, (2) help promote survey processes supportive of continuous improvement and substantive change actions, and (3) allow results to be shared quickly and in the most consumable form? The findings will be used to improve the OPM FEVS instrument to shape federal policy, program evaluations, federal workforce and workplace development strategies, agency oversight functions, and GAO engagements. | Foundational fact finding |
| Employee Services | Survey Analysis | Inclusivity Culture Quotient (ICQ). Can we advance understanding of workplace inclusion and diversity in the federal government by furthering development of a contemporary, theory-grounded measure of inclusivity and diversity? This research seeks to develop such a measure for use as the foundation for policy advancements. | Foundational fact finding |
| Employee Services | Survey Analysis | Human Capital Framework Assessment. How can we leverage the OPM FEVS to provide useful benchmark data to agency leadership and oversight data to OPM? The findings will allow federal agency leadership to benchmark results of internal Framework activities and engage in interagency learning regarding implementable practices for effectively managing talent. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Federal Executive Branch Characteristics Publication. What are the current characteristics of the federal workforce (total employment, hiring, separations, average salary, average age, and average length of service by agency, location, ethnicity, race, gender, and education level)? The findings are used to inform workforce planning. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Machine Learning Models. What are expected trends in retirements? The findings will be used to determine whether the government can expect a wave of retirements in the next two years. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Retirement Statistics & Trend Analysis. What is the total number of retirees, average age, and average length of service at retirement for the federal civilian workforce? The purpose is to provide the public with annual data on federal civilian retirement. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Dynamic Workforce Dashboards. What are agency and government workforce trends? These dashboards bring together disparate data sources to provide insights on the state of the workforce. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | DEIA-focused Dashboards. How diverse, equitable, inclusive, and accessible are federal agencies? These dashboards bring together demographic, survey, and agency data to support monitoring of DEIA executive orders and other administration priorities. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Telework Utilization Analysis. What are current utilization rates of telework? This analysis informs return-to-work planning. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Equity Assessments. How do financial readiness and health plan selection vary by demographic group? These findings will inform OPM’s equity assessments around financial readiness for retirement and Federal Employees Health Benefits plan selection. | Foundational fact finding |
| Human Capital Data Management and Modernization | Data Support and Analysis | Retirement Dashboard. What are trends in retirement eligibility, types of retirement, and retirement demographics? The dashboard will be used to inform retirement services actions. | Foundational fact finding |
| Merit Systems Accountability and Compliance | Agency Compliance and Evaluation | Human Capital Management Evaluations. Are agencies operating merit systems effectively, efficiently, and in compliance with regulations and veterans' preference law? The purpose of these evaluations is to (1) prescribe required corrective action; (2) recommend actions to improve effectiveness and efficiency, and refer possible prohibited personnel practices and merit system violations to the Office of Special Counsel; and (3) identify best practices to share with other agencies. | Foundational fact finding |
| Merit Systems Accountability and Compliance | Combined Federal Campaign (CFC) | Three-Year Analysis of CFC Transactions and Applications. What is donor retention in the CFC program? What is charity retention? The purpose of this analysis is to improve CFC marketing and charity recruitment. | Foundational fact finding |
| Suitability Executive Agent | | Full Program Reviews and Follow-up Reviews of Federal Agencies' Suitability Programs. To what extent is the agency’s suitability program achieving outcomes consistent with its delegated responsibilities? The findings identify areas of accomplishment and areas for improvement. A follow-up evaluation is conducted within one year of the program evaluation to determine progress. | Foundational fact finding |
| Healthcare and Insurance | Health Systems Analysis | Pharmacy Cost and Use Data Analysis. What are the patterns of pharmacy usage and cost under FEHB? The findings will be used for FEHB program administration. | Foundational fact finding |
| Healthcare and Insurance | Health Systems Analysis | Formulary Analysis. What is the impact of formularies on FEHB program pharmacy benefits? The findings will be used for FEHB program administration. | Foundational fact finding |
| Healthcare and Insurance | Enrollment and Member Services | Cost-Benefit Analysis for FEHB Central Enrollment Program. How does the cost of today’s FEHB enrollment and reconciliation process compare to the anticipated costs in a centralized environment? The findings will be used to inform program analysis and process improvements and to develop an acquisition strategy. | Policy analysis |
| Healthcare and Insurance | Enrollment and Member Services | Family Member Eligibility Educational Toolkits Process Evaluation. How are family member eligibility toolkits used and perceived? The findings will be used to assess how effective the tools are in educating enrollees and influencing their behavior. | Evaluation |
| Healthcare and Insurance | Enrollment and Member Services | Enrollment Process Improvements Core Group. What is the current state of FEHB enrollment processing? How can we transform enrollment processing? The purpose is to identify deficiencies and duplication in current transaction processing and to identify and develop opportunities for centralizing the FEHB enrollment process. | Foundational fact finding |
| Healthcare and Insurance | Enrollment and Member Services | Analysis of employee experiences and understanding of FEHB policy. How do federal employees learn about FEHB policy? How do they navigate enrollment decisions when HR is not on-site? What is their exposure to FEHB policy? The purpose is to assess the effectiveness of current resources and identify new opportunities to effectively educate federal employees on FEHB policy. | Foundational fact finding |
| Healthcare and Insurance | Enrollment and Member Services | FEHB Family Member Audit. What percent of family members enrolled in FEHB are ineligible? The findings will be used to inform program analysis and process improvement, to develop an acquisition strategy, and to identify fraud, waste, and abuse. | Foundational fact finding |
| Healthcare and Insurance | Enrollment and Member Services | FEHB Enrollment Data Analysis. What is the quality of FEHB electronic enrollment transaction data? Can we use the data to identify opportunities to refine FEHB enrollment data processes? The purpose is to analyze the quality of FEHB electronic enrollment transaction data to find opportunities to refine enrollment data processes; the findings will be used to inform program analysis and process improvement. | Foundational fact finding |
| Healthcare and Insurance | Program Analysis and Development | Automated Data Collection. How are FEHB carriers performing on certain topics and areas identified in the annual Call Letter? The Automated Data Collection collects information from FEHB carriers on topics and areas identified in the annual Call Letter to analyze adherence and performance related to health insurance. The collection informs future policy positions and the content of future Call Letters, and identifies areas of needed improvement for FEHB. | Foundational fact finding |
| Healthcare and Insurance | Systems Development and Implementation | FEHB Master Enrollment Index. Can more centralized enrollment information help streamline FEHB administration and improve carrier enrollment processing? The purpose of the index is to improve FEHB enrollment administration and reporting. | Foundational fact finding |
| Office of the Chief Information Officer | Information Technology | IT Assessment. How can OCIO transform to effectively meet OPM's mission? The purpose of this analysis is to research project prioritization, funding allocations, process improvements, IT architecture improvements, staffing changes, and training. | Foundational fact finding |
| Office of the Chief Information Officer | Information Technology | IT Architecture Review. What IT systems exist throughout OPM and what are their characteristics and interconnections? The purpose of this analysis is to research how OPM OCIO will staff to support technologies in use across all of OPM. | Foundational fact finding |
| Office of the Chief Financial Officer | Financial Systems and Operations | Joint Data Management Strategy. How will the financial data structure comply with OPM's reporting mandates once financial systems are migrated to Federal Shared Service Providers? This analysis will identify how the financial data structure will comply with those mandates after the migration. | Foundational fact finding |
| Office of Procurement Operations | Acquisition Policy and Innovation | Annual Value Engineering Efforts. Have OPM’s value engineering efforts reduced program and acquisition costs, improved performance, enhanced quality, or fostered the use of innovation? This annual report provides OMB’s Administrator for Federal Procurement Policy with the fiscal year results of OPM’s use of value engineering. | Foundational fact finding |
| Office of Procurement Operations | Acquisition Policy and Innovation | Contracting Officer's Representative Development Program Survey. How can OPO assist contracting officer's representatives (CORs) with performing their duties? The survey results will identify opportunities for OPO to assist CORs with performing their duties. | Foundational fact finding |
| Office of Procurement Operations | Acquisition Policy and Innovation | Requirements Design Studio. How can OPM make the requirements development process more efficient and effective? The purpose of this analysis is to research innovative methods and processes, and to develop and evaluate tools to improve the quality of procurement requirement information. | Foundational fact finding |
| OPM Human Resources | Talent Development | Competency Exploration Development and Readiness Tool. What are OPM’s agency-specific skill gaps and how is OPM doing in mitigating these gaps? The findings will be used to support OPM's talent development efforts to upskill and reskill its workforce, in alignment with the NAPA report recommendation to restore the agency’s reputation for human capital leadership, expertise, and service by redirecting the internal culture and rebuilding internal staff capacity. | Foundational fact finding |
| OPM Human Resources | Hiring Process | FY 2021 Delegated Examining Evaluation: Accountability and Hiring Process. How effective and efficient is OPM’s internal hiring process, including employee onboarding and new hire orientation? The findings will be used to make program and policy improvements related to OPM’s internal hiring process. | Evaluation |
| OPM Human Resources | Talent Management | Future of Work/Flexible Work Arrangements Survey. What were OPM employees’ experiences during the pandemic, and what are their preferences for flexible work arrangements, schedules, and employee supports? These findings will be used to inform the development of OPM’s Future of Work Plan and program enhancements that address employee feedback. | Foundational fact finding |
| OPM Human Resources | Human Capital Analytics | Human Capital Dashboard. What are trends in OPM demographics, hiring and attrition, and other human capital measures? This descriptive data provides OPM senior leadership with context to inform decision-making that affects their organization’s workforce. | Foundational fact finding |
| OPM Human Resources | Performance Management | Internal Audits of the Performance Management System. How effective are OPM’s awards and recognition programs? These data will be used to develop baseline measures for OPM’s performance management program, such as timeliness of recognition, utilization of various award types, and employee satisfaction, and to determine common strengths and opportunities for improvement. | Foundational fact finding |
| OPM Human Resources | Employee Perceptions | Exit Survey. How do exiting employees perceive OPM program and support areas and their work environment? These surveys are used to evaluate feedback on OPM and identify areas for improvement. | Foundational fact finding |

Coverage and balance of evidence activities

To assess whether there is an appropriate balance of evidence activities to address diverse organizational needs, OPM reviewed the balance of types of evidence activities currently being conducted or planned. Additionally, it leveraged data from the OPM internal survey and GAO’s Managing for Results survey to examine the extent to which types of evidence were needed and available for organizational use.

Of the 63 evidence activities identified in the review, 52 (83 percent) were classified as foundational fact finding, 7 (11 percent) as policy analysis, and just 4 (6 percent) as evaluation. Performance measurement was not included in the review; OPM has a robust performance measurement process for strategic plan priorities, although performance information may be less used at the office level. OPM’s largest imbalance is the dearth of evaluations of any type at the agency: OPM’s FY 2022 annual evaluation plan currently includes just one outcome evaluation. This is a critical area targeted for improvement.
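The percentages above follow directly from the activity counts. As a minimal check, assuming the report rounded each share to the nearest whole percent, the breakdown can be reproduced as:

```python
# Counts of evidence activities by type, as reported in the review (63 total).
counts = {
    "Foundational fact finding": 52,
    "Policy analysis": 7,
    "Evaluation": 4,
}

total = sum(counts.values())  # 63 activities in all

# Whole-number percentages; rounding to the nearest percent is an assumption.
shares = {kind: round(100 * n / total) for kind, n in counts.items()}

for kind, pct in shares.items():
    print(f"{kind}: {counts[kind]} of {total} ({pct} percent)")
```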

The GAO survey also assessed availability of various evidence types for programs at OPM as compared to the federal government. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding data availability.

| Item | OPM | Federal Government |

|---|---|---|

| I have access to the performance information I need to manage my program(s) | 69.7% | 63.1% |

| I have access to program evaluation(s) I need to manage my program(s) | 33.3% | 31.3% |

| To what extent do you have the following types of information available to you for the program(s) that you are involved with? Administrative data | 40.4% | 30.2% |

| To what extent do you have the following types of information available to you for the program(s) that you are involved with? Statistical data | 37.3% | 29.8% |

| To what extent do you have the following types of information available to you for the program(s) that you are involved with? Research and analysis | 26.0% | 21.6% |

OPM outperformed the government as a whole on the availability of every evidence type. OPM managers were most likely to report having performance information to a great or very great extent, but the availability of other types of evidence was much lower, which suggests a need for more access to evidence to inform programs.

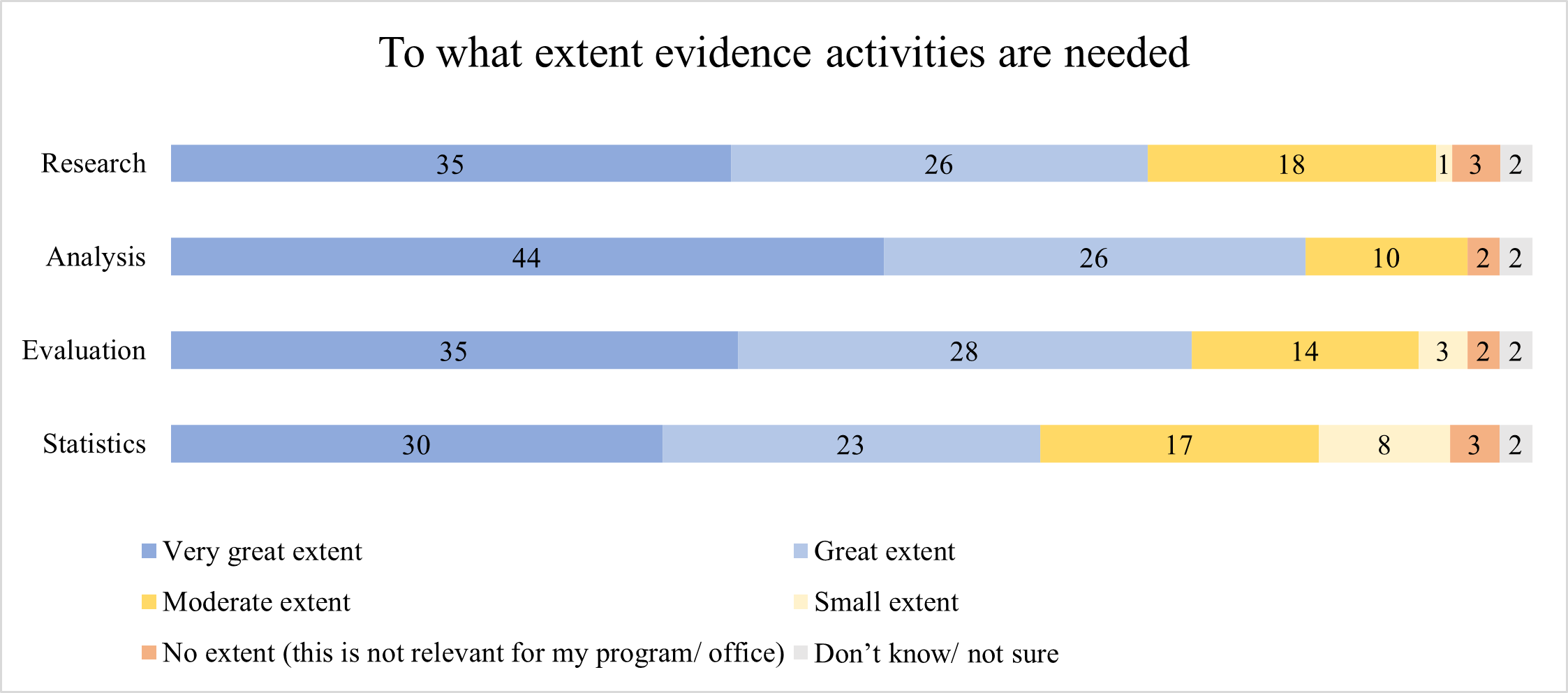

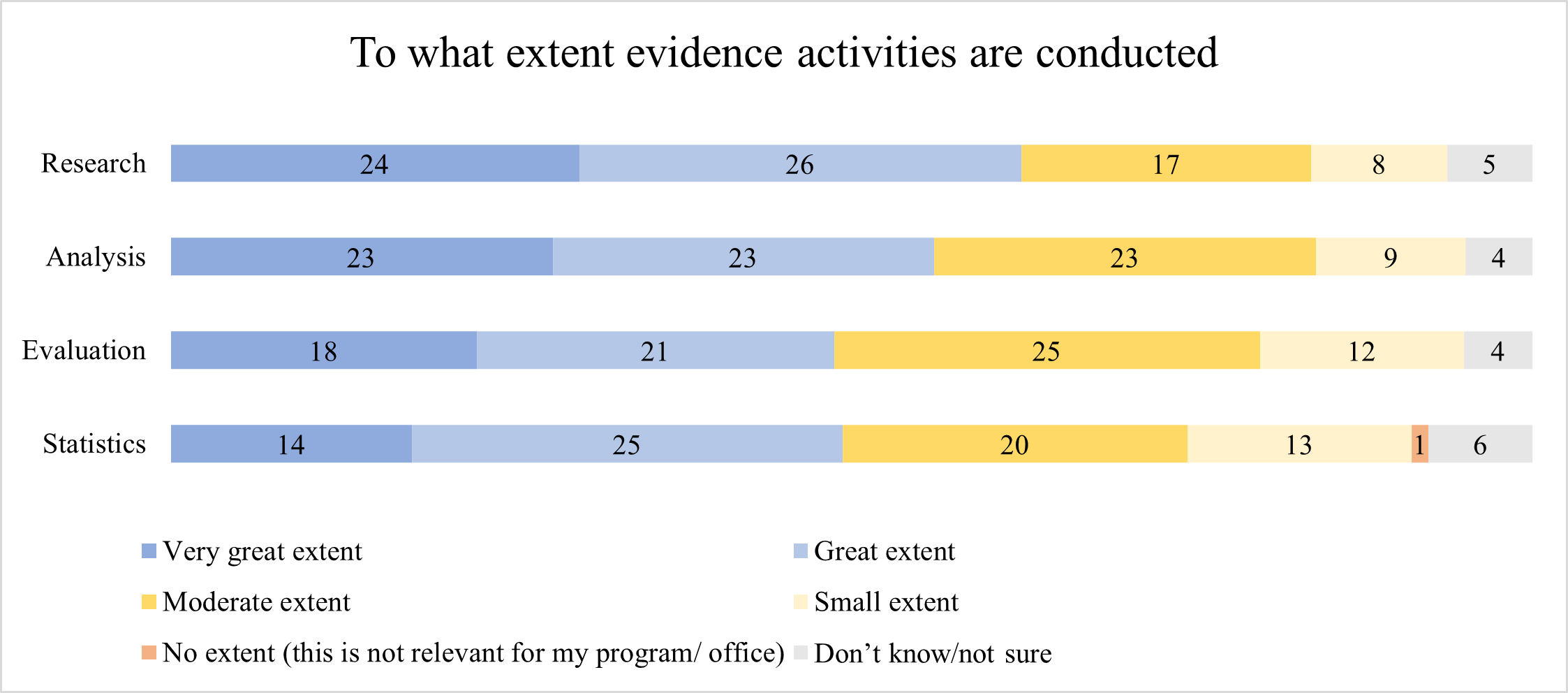

OPM’s internal survey asked respondents to assess, for each evidence activity, the extent to which their offices needed it and the extent to which they conducted or leveraged it.

To what extent evidence activities are needed

To what extent evidence activities are conducted

OPM’s survey corroborates the findings of the GAO survey. The findings show that OPM managers, supervisors, and senior leaders perceive a large need for research, analysis, evaluation, and statistics in their offices, but that evidence activities are conducted to a lesser extent than they are needed. This gap may help explain why managers in the GAO survey reported relatively low availability of evidence other than performance information.
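To make the need-versus-conduct comparison concrete, the sketch below computes a top-two-box share (great plus very great extent) for each activity from the response counts reproduced after the evidence-type definitions at the end of this section. It assumes the first of those two count tables reports need and the second reports conduct (an inference from their order and values), and it uses all listed responses, including “don’t know,” as the denominator. The `top_two_share` helper is illustrative and is not OPM’s published method.

```python
from typing import Sequence

# Response counts per activity, in row order: very great, great, moderate,
# small, no extent/not relevant, don't know.
# ASSUMPTION: the first table after the evidence-type definitions reports
# need and the second reports conduct.
NEEDED = {
    "Research": [35, 26, 18, 1, 3, 2],
    "Analysis": [44, 26, 10, 0, 2, 2],
    "Evaluation": [35, 28, 14, 3, 2, 2],
    "Statistics": [30, 23, 17, 8, 3, 2],
}
CONDUCTED = {
    "Research": [24, 26, 17, 8, 0, 5],
    "Analysis": [23, 23, 23, 9, 0, 4],
    "Evaluation": [18, 21, 25, 12, 0, 4],
    "Statistics": [14, 25, 20, 13, 1, 6],
}


def top_two_share(counts: Sequence[int]) -> float:
    """Share of all listed responses falling in the top two categories."""
    return (counts[0] + counts[1]) / sum(counts)


gaps = {}
for activity in NEEDED:
    need = top_two_share(NEEDED[activity])
    done = top_two_share(CONDUCTED[activity])
    gaps[activity] = need - done  # positive means need exceeds conduct
    print(f"{activity:<11} need {need:6.1%}  conducted {done:6.1%}  gap {need - done:+6.1%}")
```

Under these assumptions, the top-two share for need exceeds the share for conduct for all four activities, with the widest gap for analysis.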

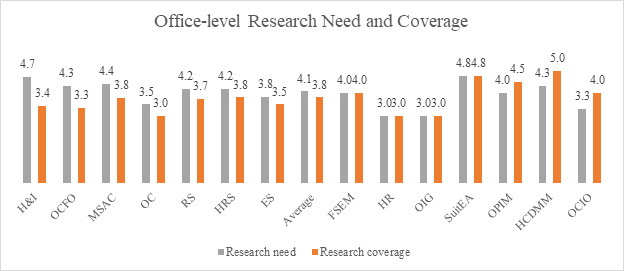

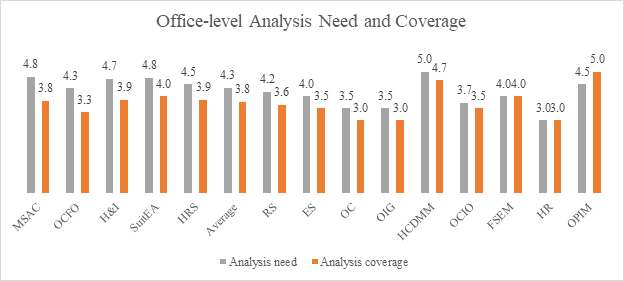

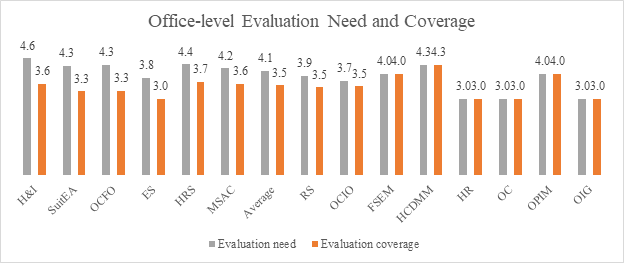

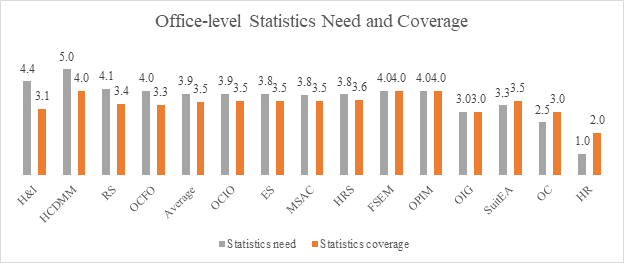

The office-level findings comparing need for evidence activities and coverage are included in Annex A. These findings show that both evidence need and coverage vary by office, but that on average the need exceeds the coverage.

Quality of evidence activities

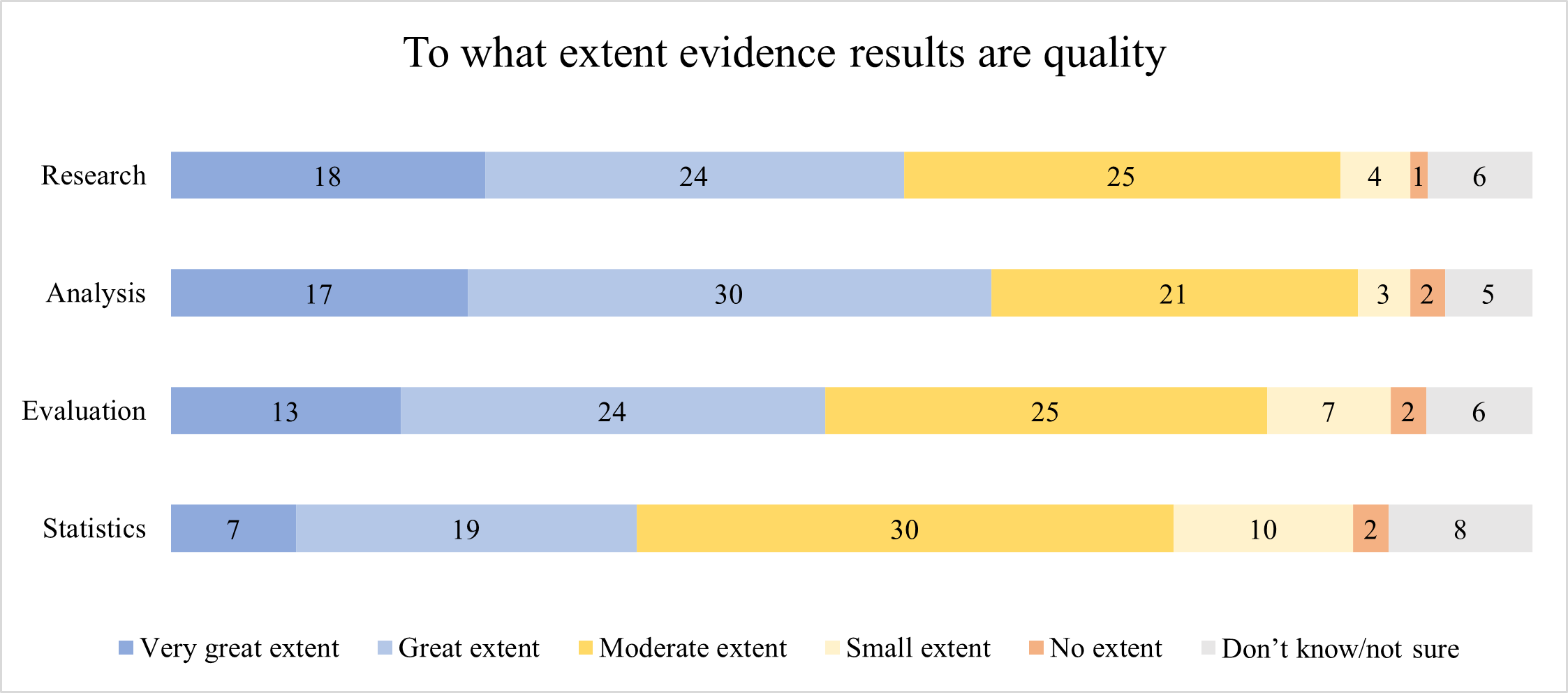

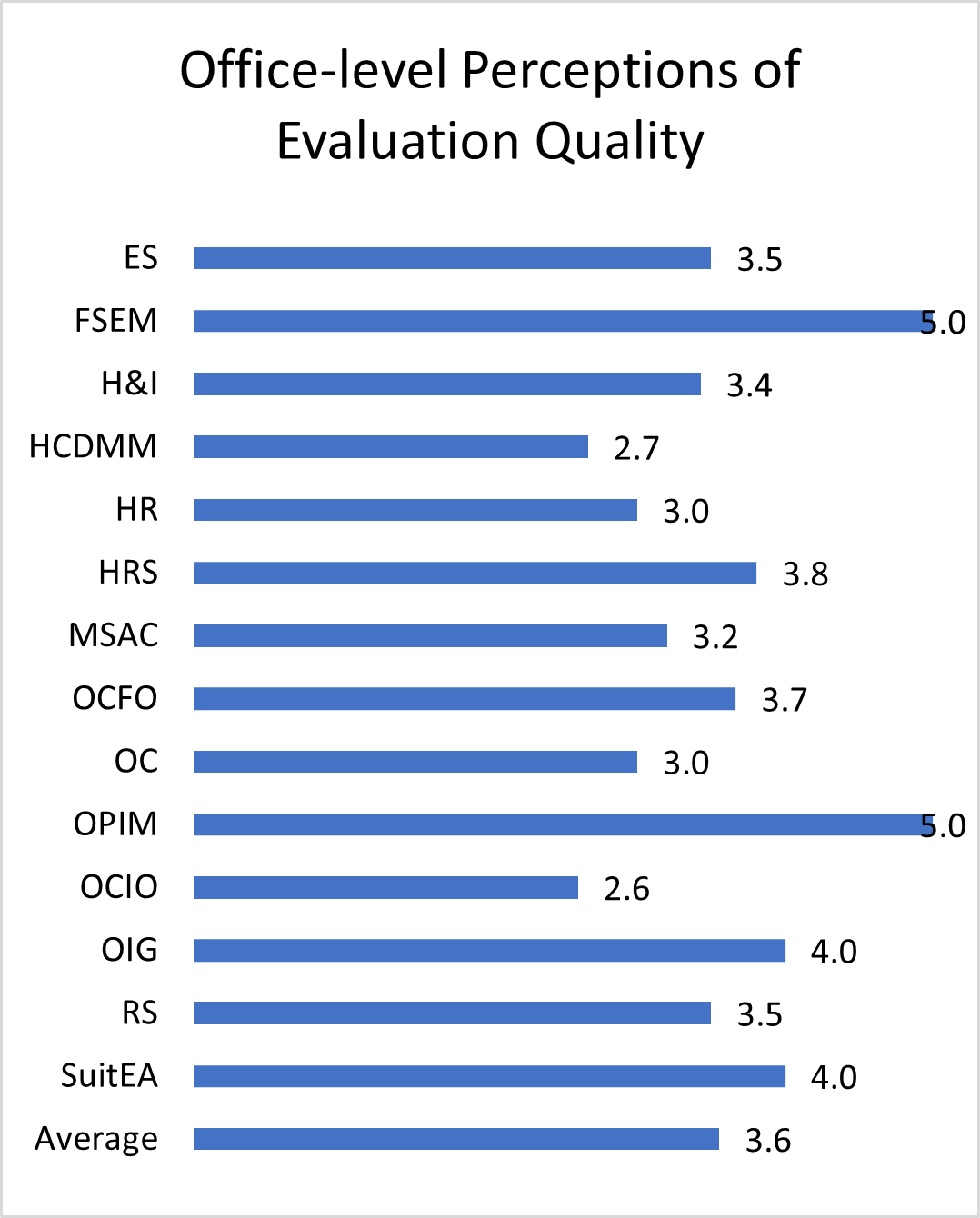

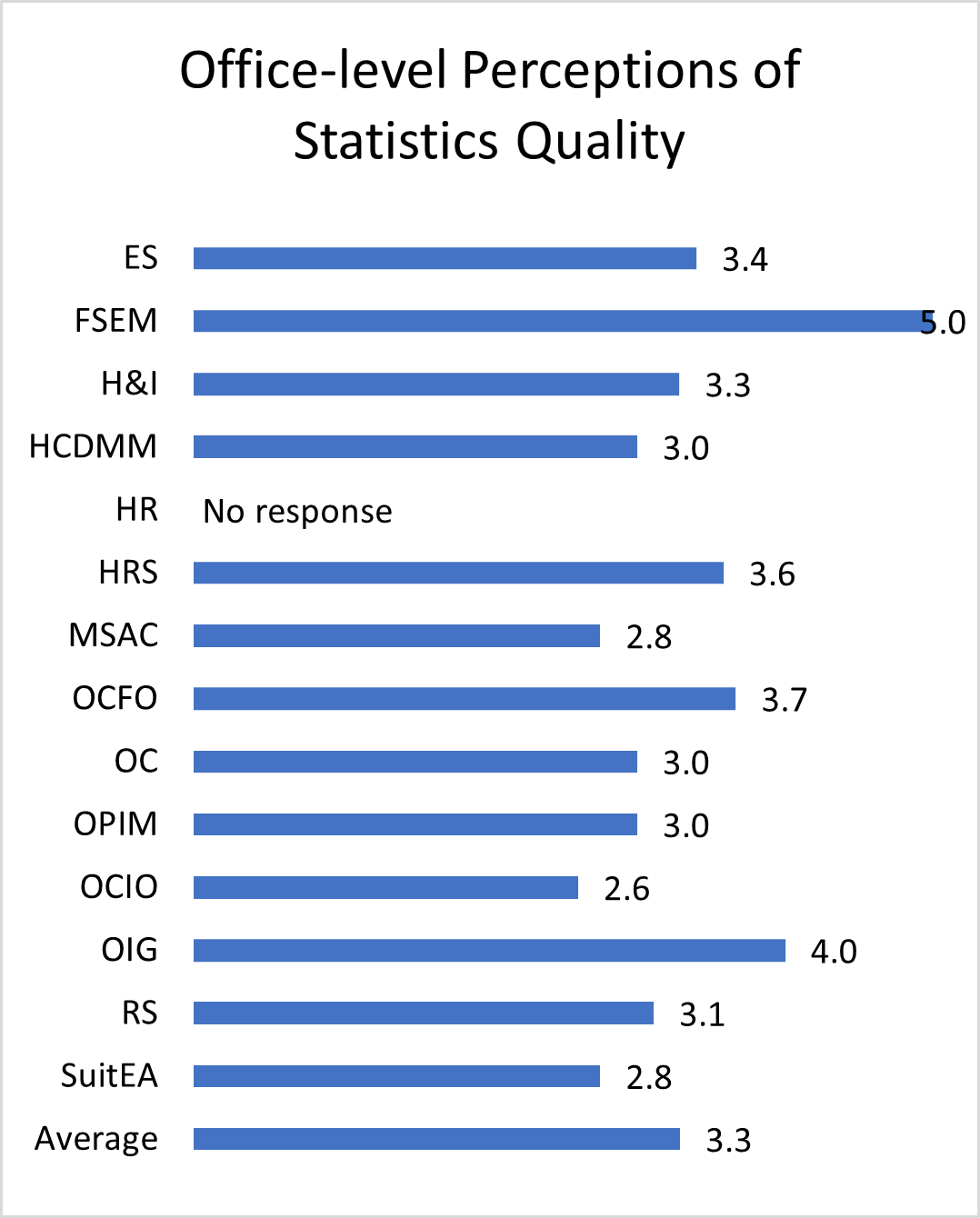

OPM is committed to processes and procedures that promote the quality of evidence, including adherence to its published evaluation standards, privacy laws, and internal expert guidance. To assess the quality of current evidence activities, OPM leveraged data from GAO’s Managing for Results survey to examine evaluation quality dimensions and from OPM’s internal survey to assess perceptions of the quality of results for all evidence types.

The GAO survey did not explicitly address quality. OPM’s internal survey asked respondents to assess, for each evidence activity, how confident they were in the quality of its results. While this does not cover all potential aspects of study quality, it does provide an initial assessment of OPM leaders’ views on evidence quality.

To what extent evidence results are quality

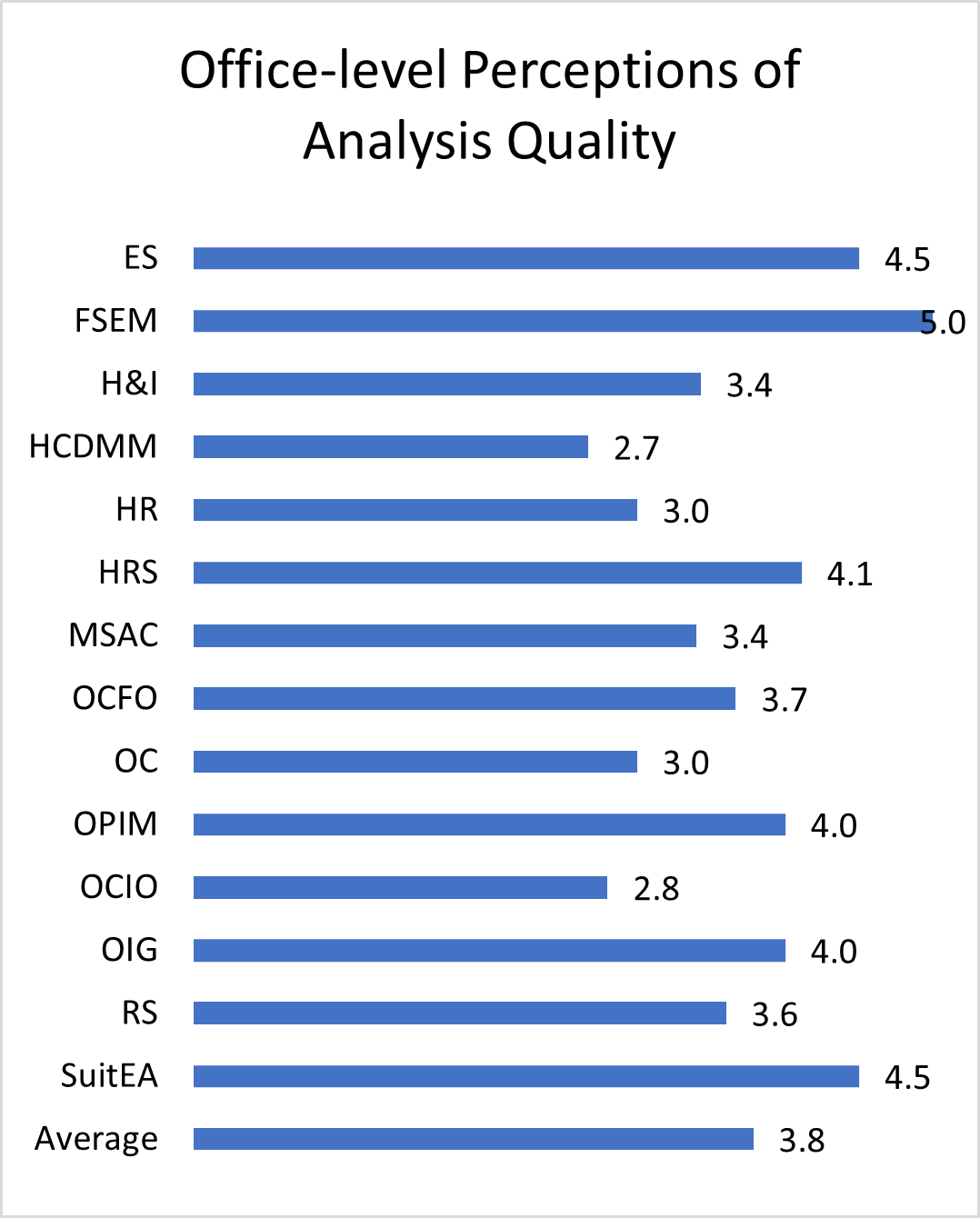

OPM managers’, supervisors’, and senior leaders’ perceptions of evidence quality varied by type of activity and were generally mixed. Respondents were least confident in the quality of statistical activities and most confident in the quality of OPM’s analysis results.
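The ranking in this paragraph can be checked against the response counts in the table titled “To what extent evidence results are quality” at the end of this section. As a sketch, assuming “confidence” means a rating of great or very great extent and using all listed responses (including “don’t know” and “N/A”) as the denominator:

```python
# Response counts from the evidence-quality table, in row order: very great,
# great, moderate, small, no extent, don't know, N/A.
QUALITY = {
    "Research": [18, 24, 25, 4, 1, 6, 2],
    "Analysis": [17, 30, 21, 3, 2, 5, 2],
    "Evaluation": [13, 24, 25, 7, 2, 6, 2],
    "Statistics": [7, 19, 30, 10, 2, 8, 4],
}

# Share of all listed responses rating result quality great or very great.
shares = {activity: (c[0] + c[1]) / sum(c) for activity, c in QUALITY.items()}

# Order activities from most to least confidence in result quality.
ranked = sorted(shares, key=shares.get, reverse=True)
for activity in ranked:
    print(f"{activity:<11} {shares[activity]:.1%}")
```

With these assumptions, analysis ranks first (just under 60 percent) and statistics last (about a third), matching the pattern described above.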

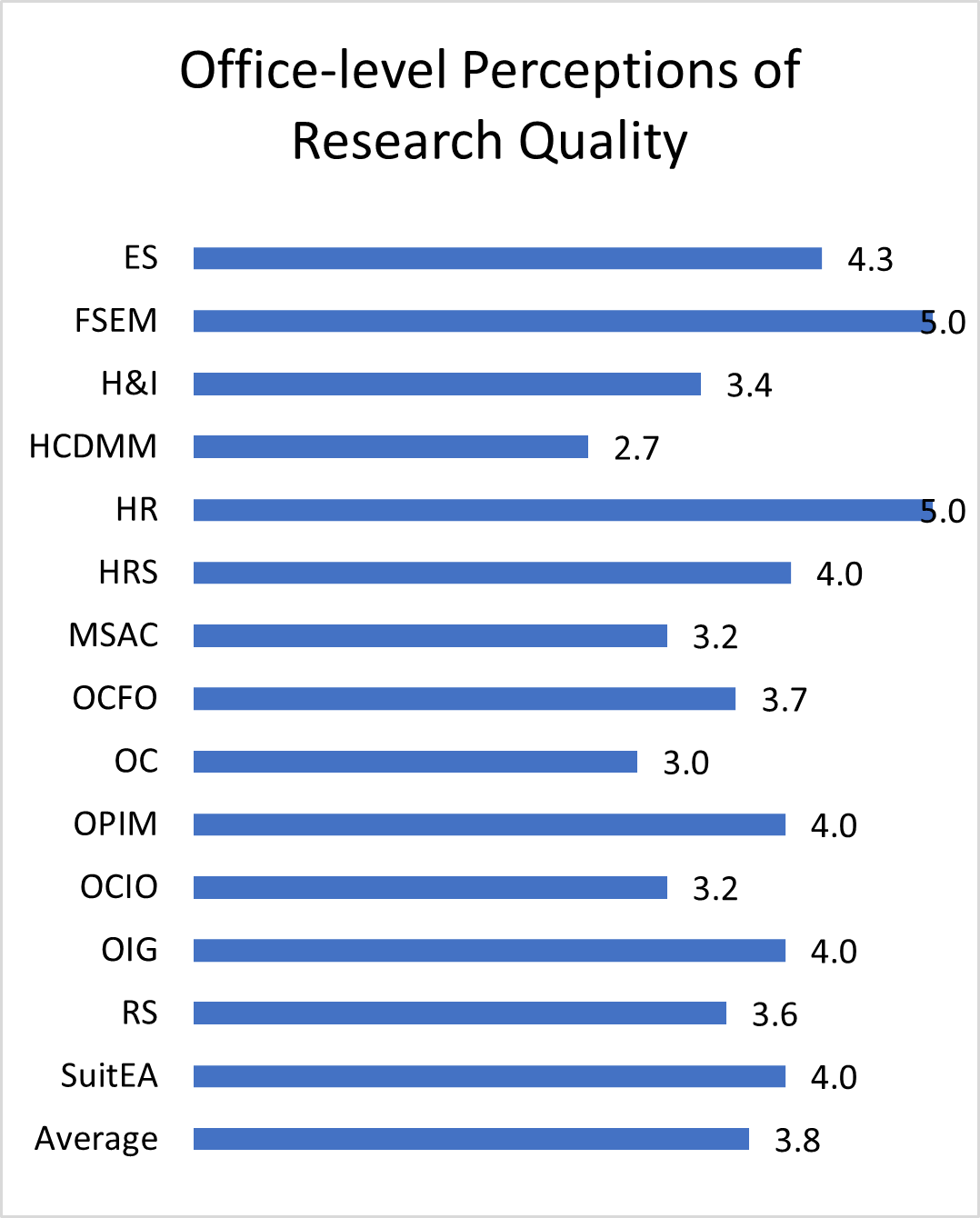

The office-level findings showing perceived quality for each evidence activity are included in Annex A.

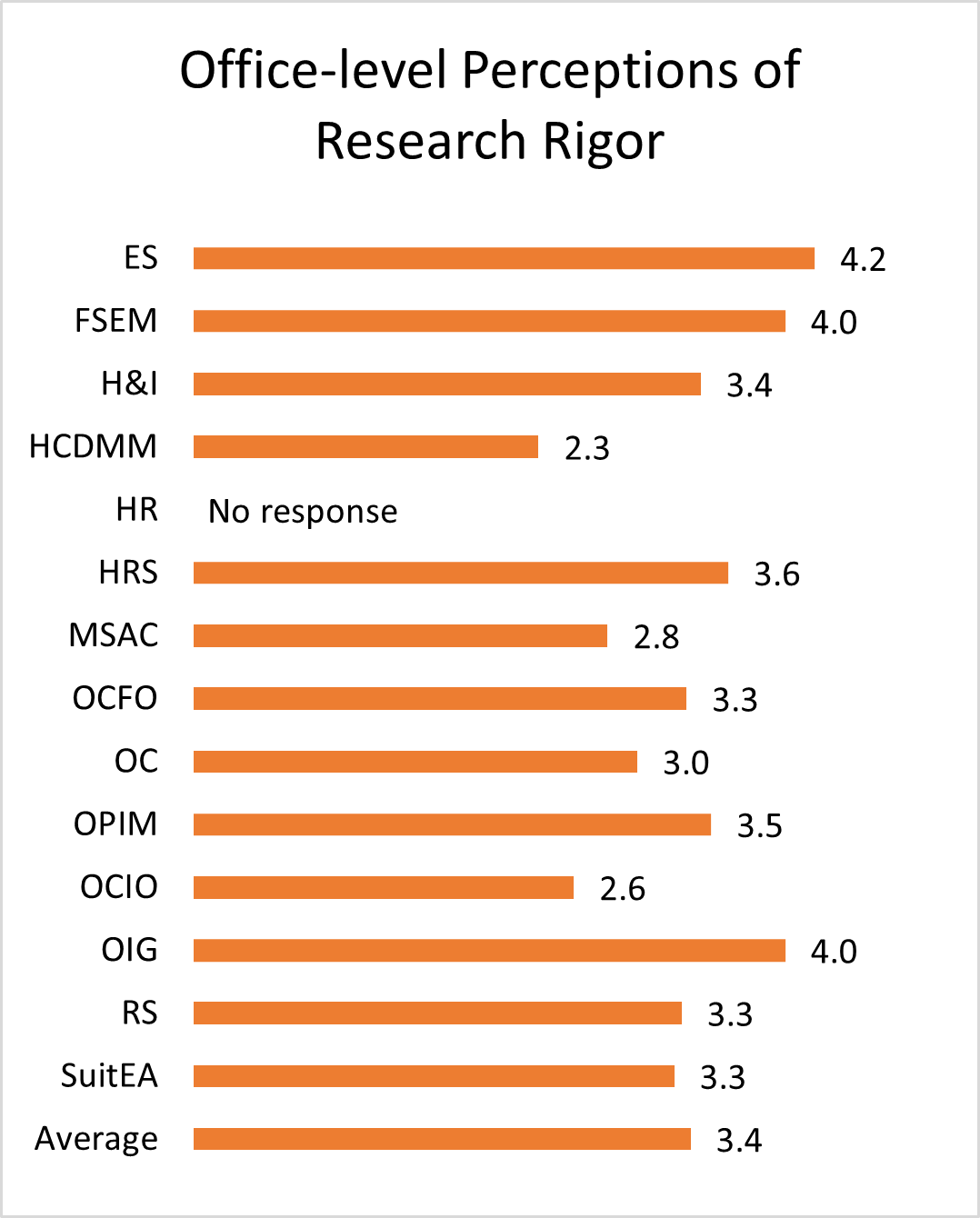

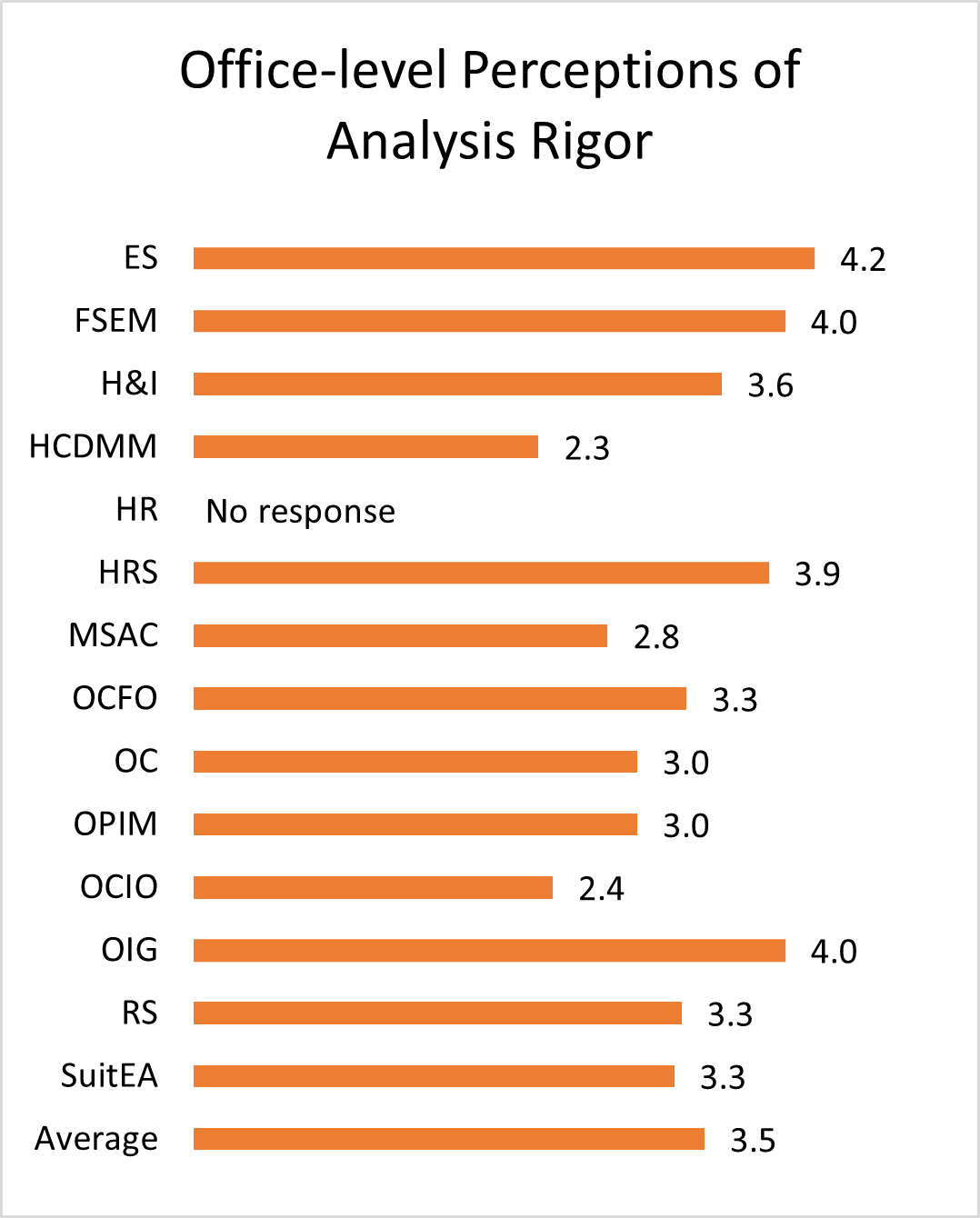

Appropriateness of methods

OPM is currently seeking to strengthen its methodological rigor by centralizing oversight of research and evaluation within the Office of the Chief Financial Officer’s Planning, Performance, and Evaluation Group, under the purview of the Evaluation Officer. OPM is also instituting more standardized evidence reviews, cataloguing existing evidence on its knowledge management platforms, and developing a peer review process to further promote the appropriateness and rigor of its research and evaluation.

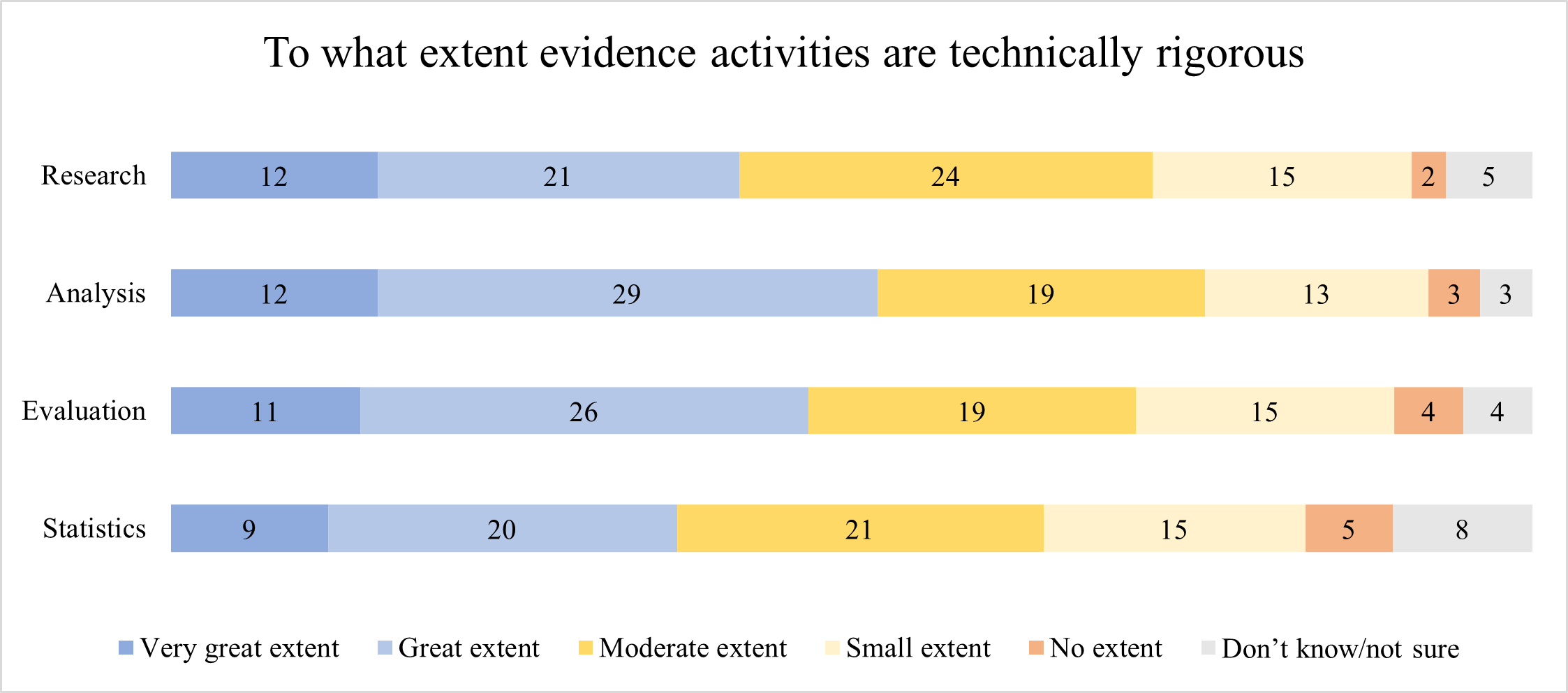

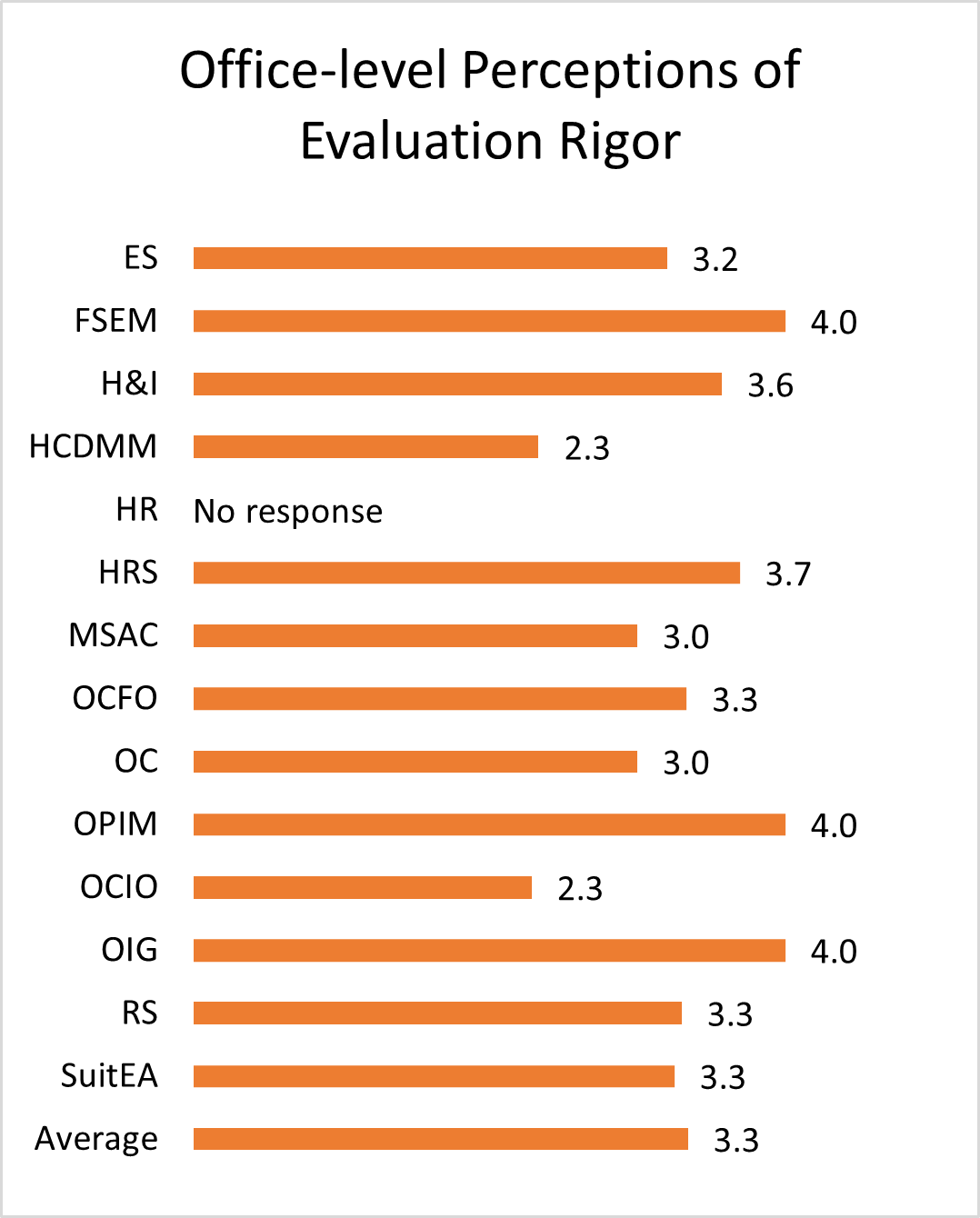

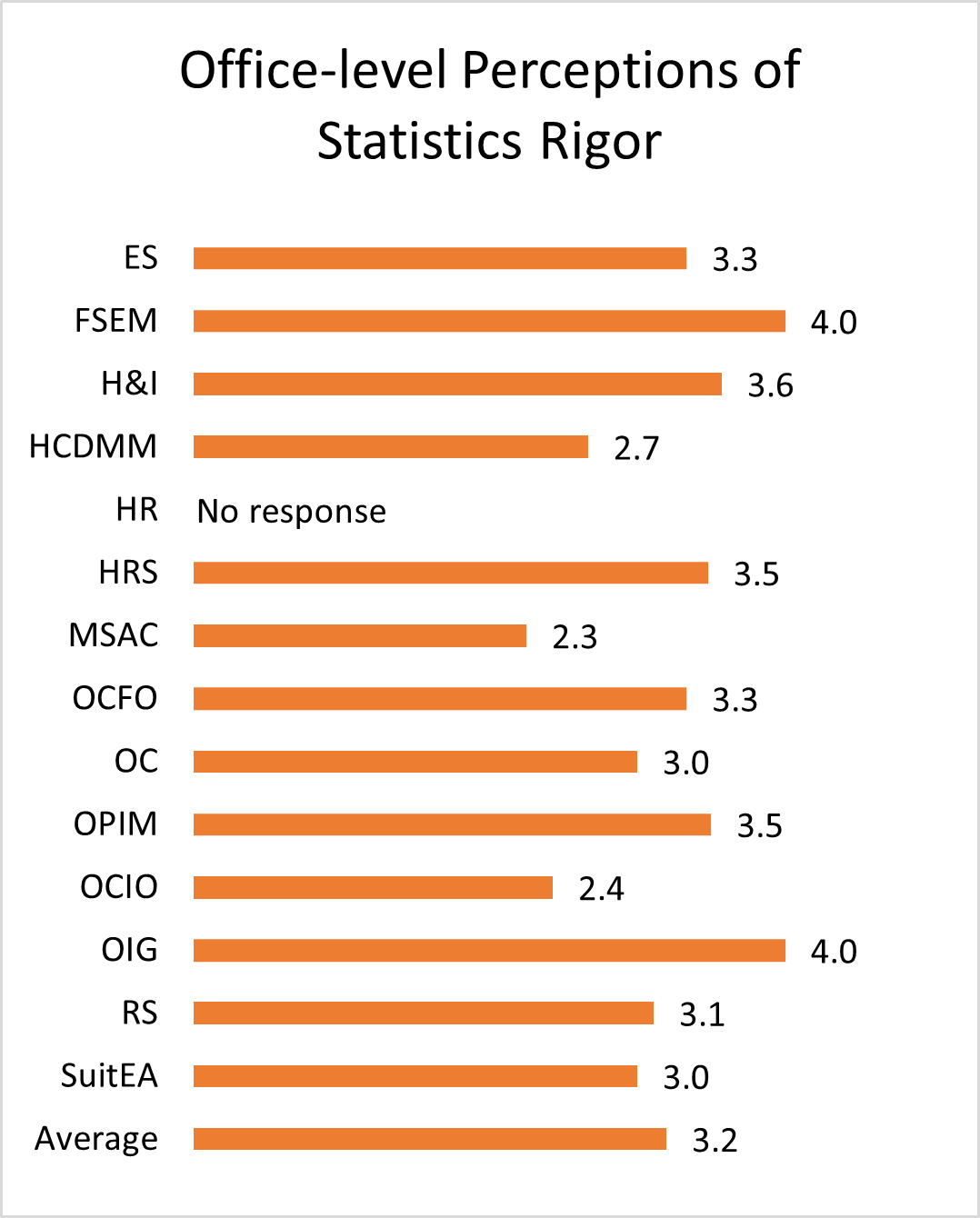

To assess the current state, OPM leveraged data from GAO’s Managing for Results survey to examine evaluation rigor and OPM’s internal survey to assess perceptions of rigor for all evidence types.

The GAO survey assessed technical rigor for evaluation activities at OPM as compared to the federal government. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to an item about evaluation rigor.

| Item | OPM | Federal Government |
|---|---|---|
| Evaluations were technically rigorous (i.e., they produced accurate, valid, and high-quality evidence) | 24.3% | 32.1% |

OPM scored lower than the government overall, in part because a larger share of OPM respondents did not respond or indicated that they had no basis to judge. This result is nonetheless consistent with OPM’s own internal audit, which suggests that few evaluation activities are under way and that most are not rigorous.

OPM’s internal survey asked respondents to assess the extent to which their office’s evidence activities were technically rigorous and used the appropriate methods, tools, and techniques to address the need.

To what extent evidence activities are technically rigorous

Overall, OPM managers, supervisors, and senior leaders reported relatively low technical rigor for evidence activities: with the exception of analysis, fewer than 50 percent of respondents rated each evidence activity as rigorous to a great or very great extent.

The office-level findings showing perceived rigor for each evidence activity are included in Annex A.

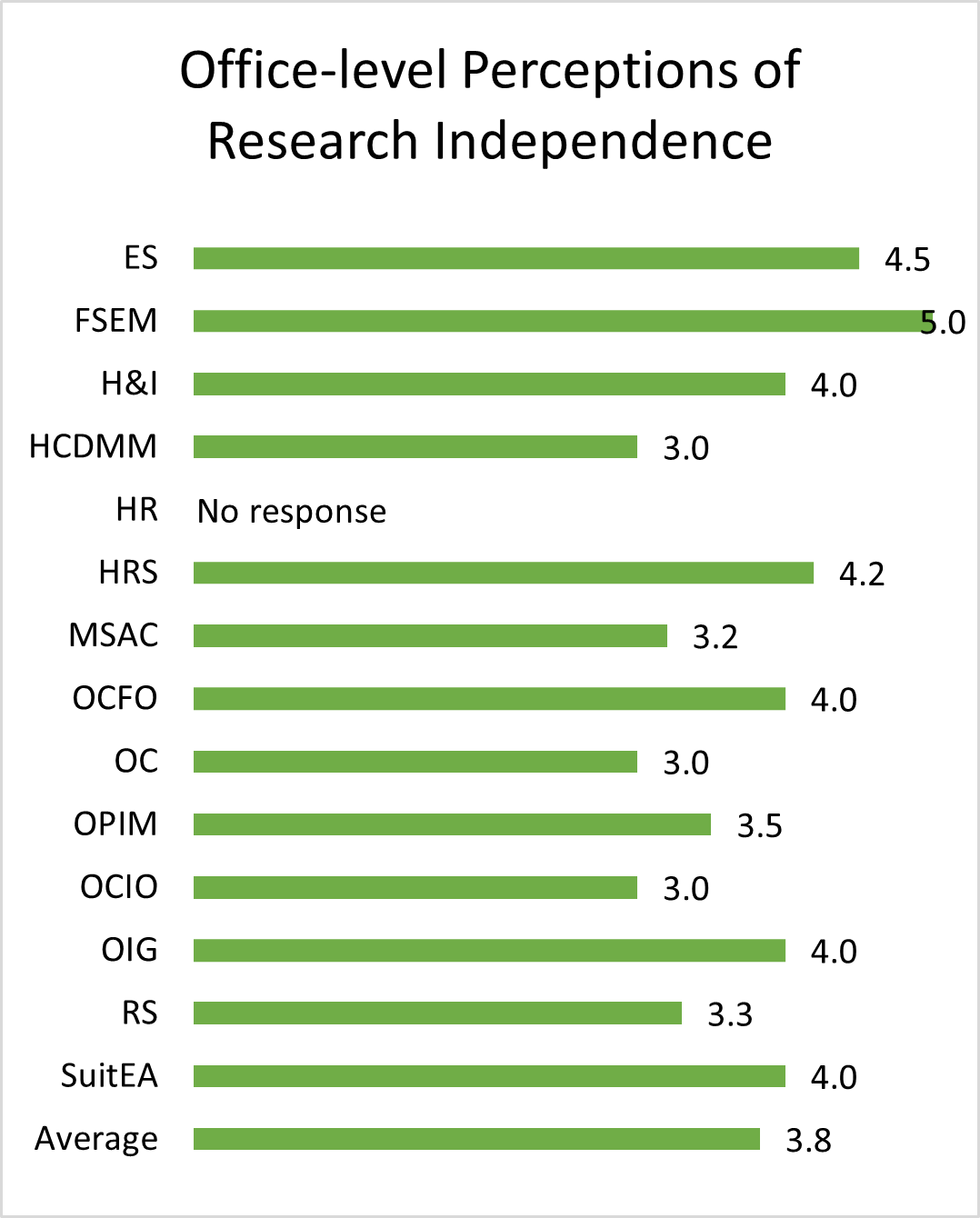

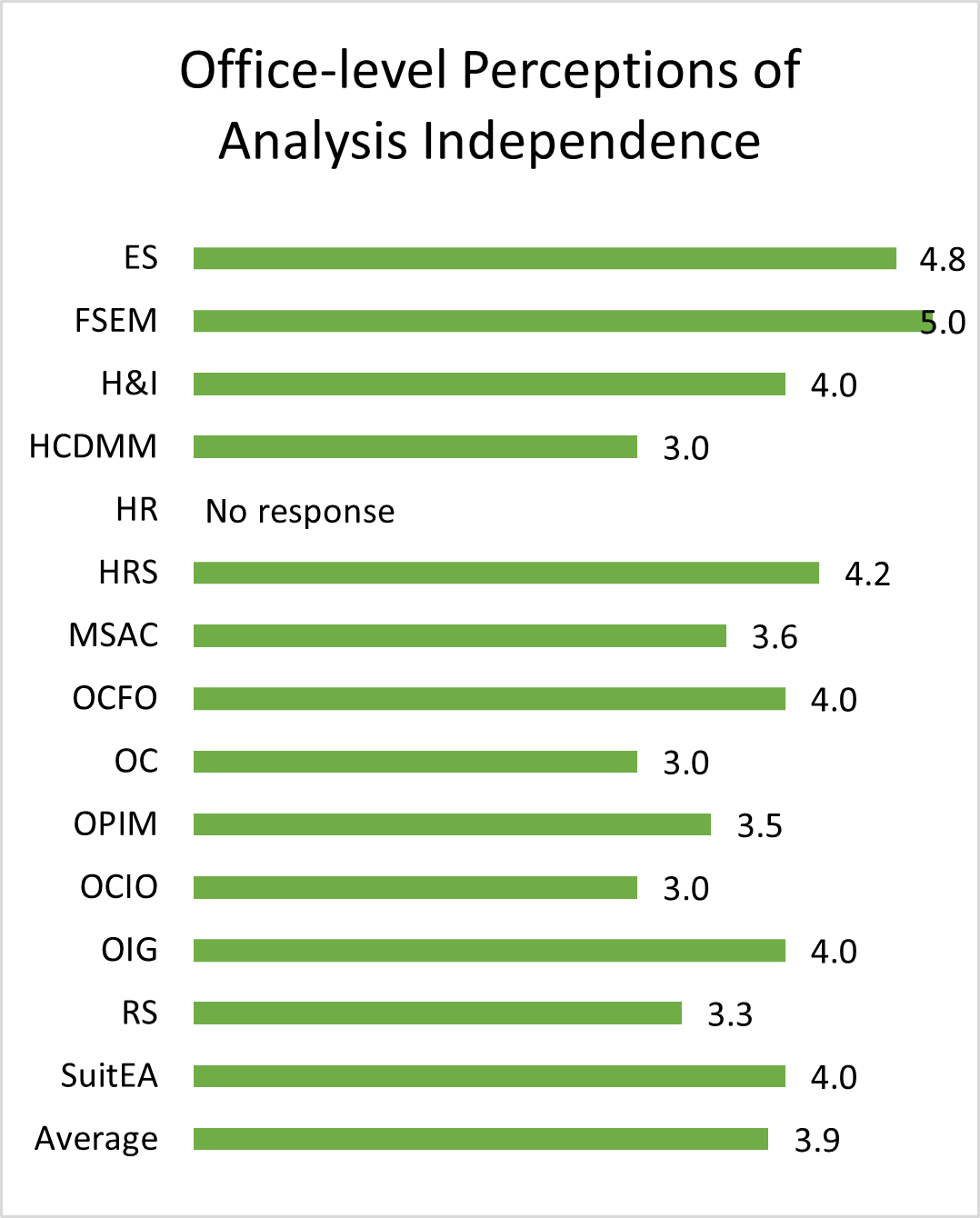

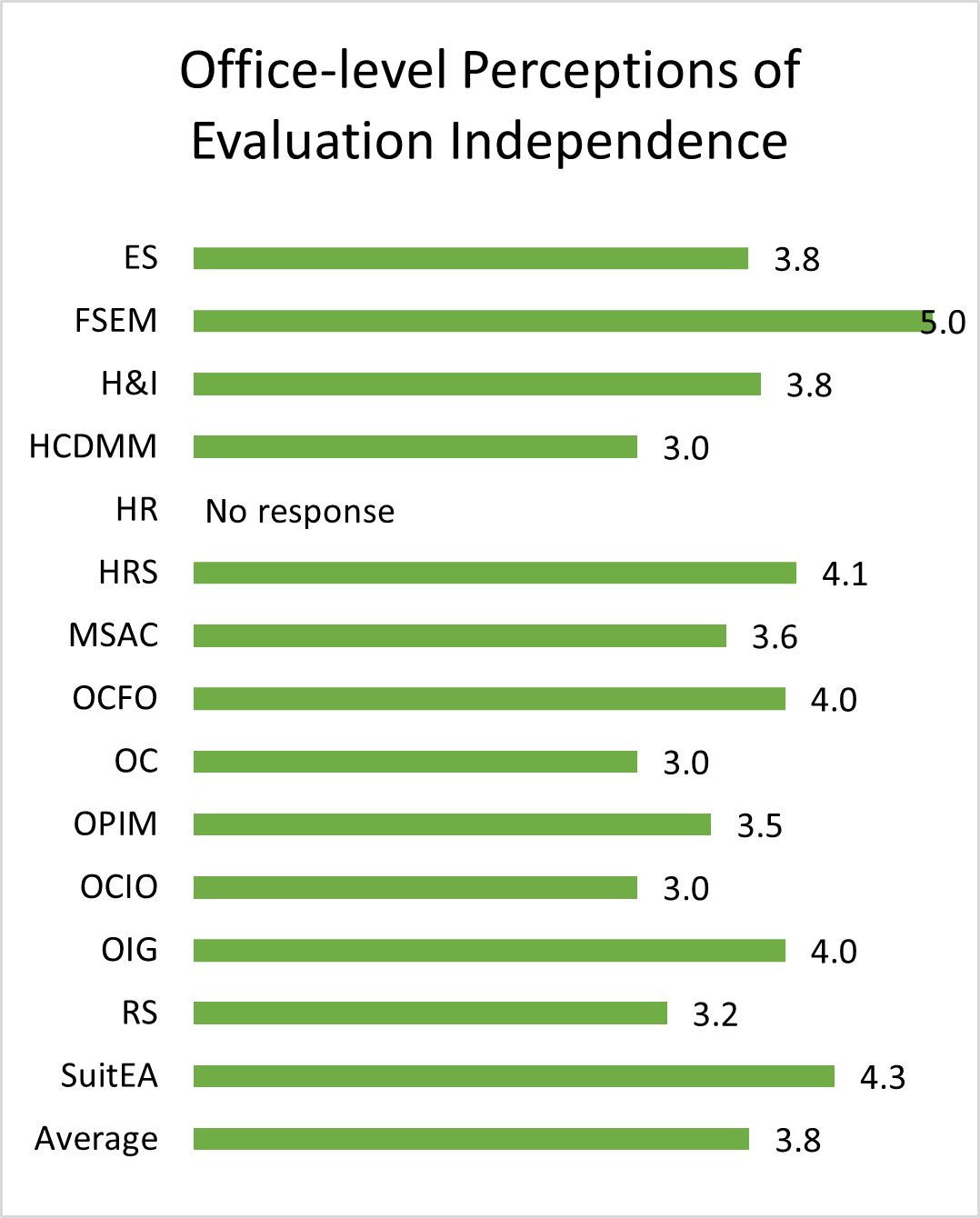

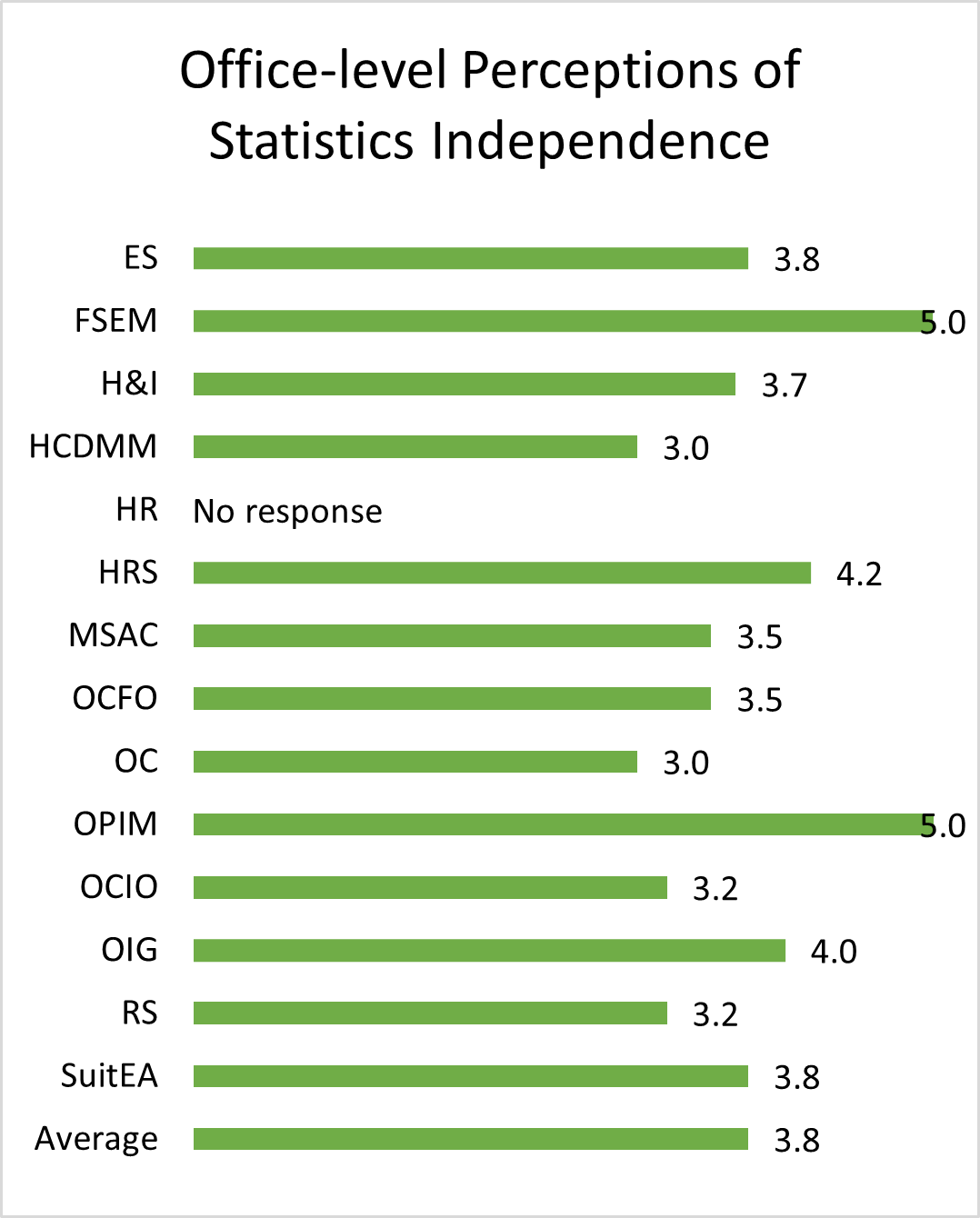

Independence of evidence activities

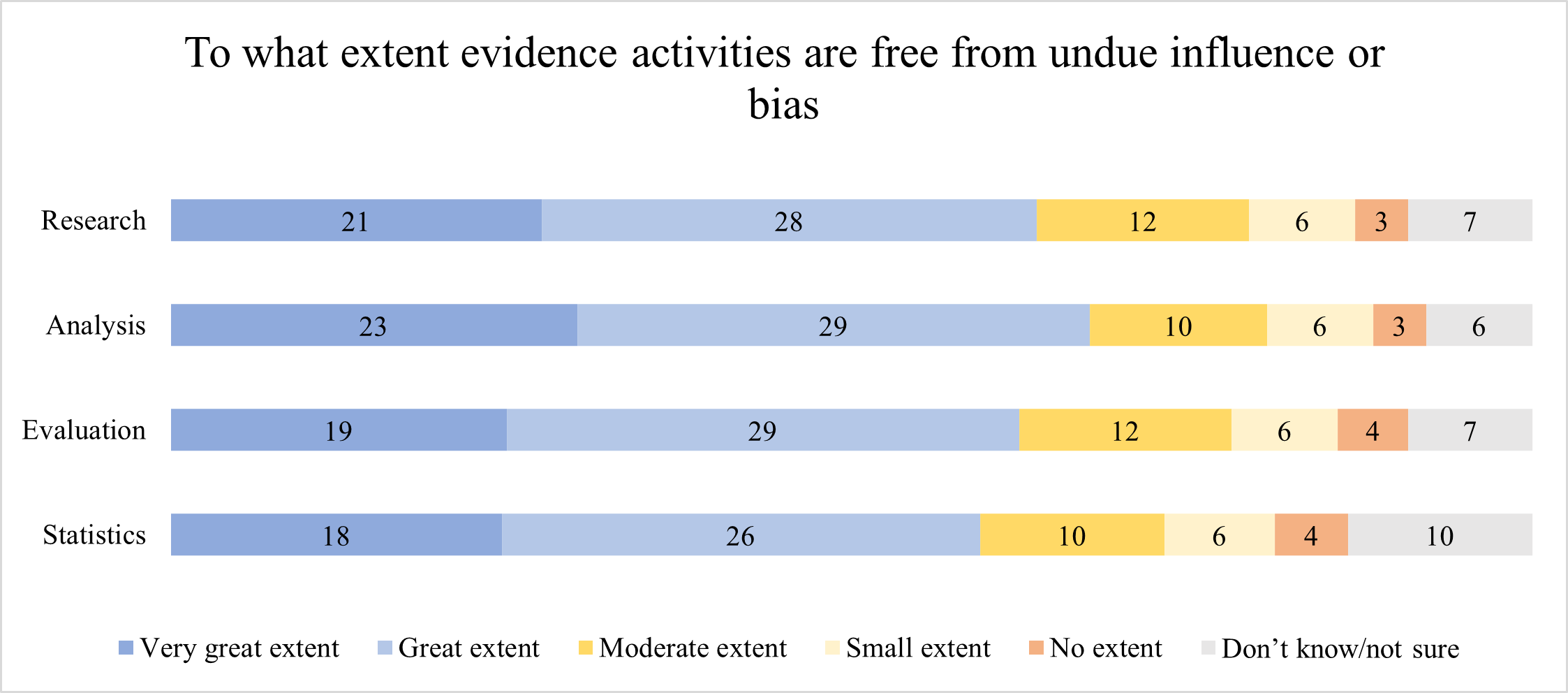

OPM’s evidence activities are generally conducted for internal audiences and program improvement and thus may be less susceptible to external stakeholder influence or bias; however, intra-agency influence and bias may present an issue. To assess perceptions of independence of evidence activities, OPM leveraged data from GAO’s Managing for Results survey to examine undue influence in evaluations and OPM’s internal survey to examine undue influence in all evidence types.

The GAO survey assessed whether evaluation activities were completed without undue influence at OPM as compared to the federal government. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to an item about undue influence.

| Item | OPM | Federal Government |
|---|---|---|
| Evaluations were completed without undue influence | 29.0% | 35.5% |

OPM scored lower than the government overall, in part because a larger share of OPM respondents did not respond or indicated that they had no basis to judge. This result is nonetheless consistent with OPM’s own internal audit, which suggests that few evaluation activities are under way and that most are not rigorous.

OPM’s internal survey asked respondents to assess the extent to which their office’s evidence activities were free from bias or undue influence.

To what extent evidence activities are free from undue influence or bias

Most OPM managers, supervisors, and senior leaders reported that evidence activities were independent (that is, free from undue influence or bias), though there is room to further strengthen the independence of OPM’s evidence activities.

The office-level findings showing perceived independence for each evidence activity are included in Annex A.

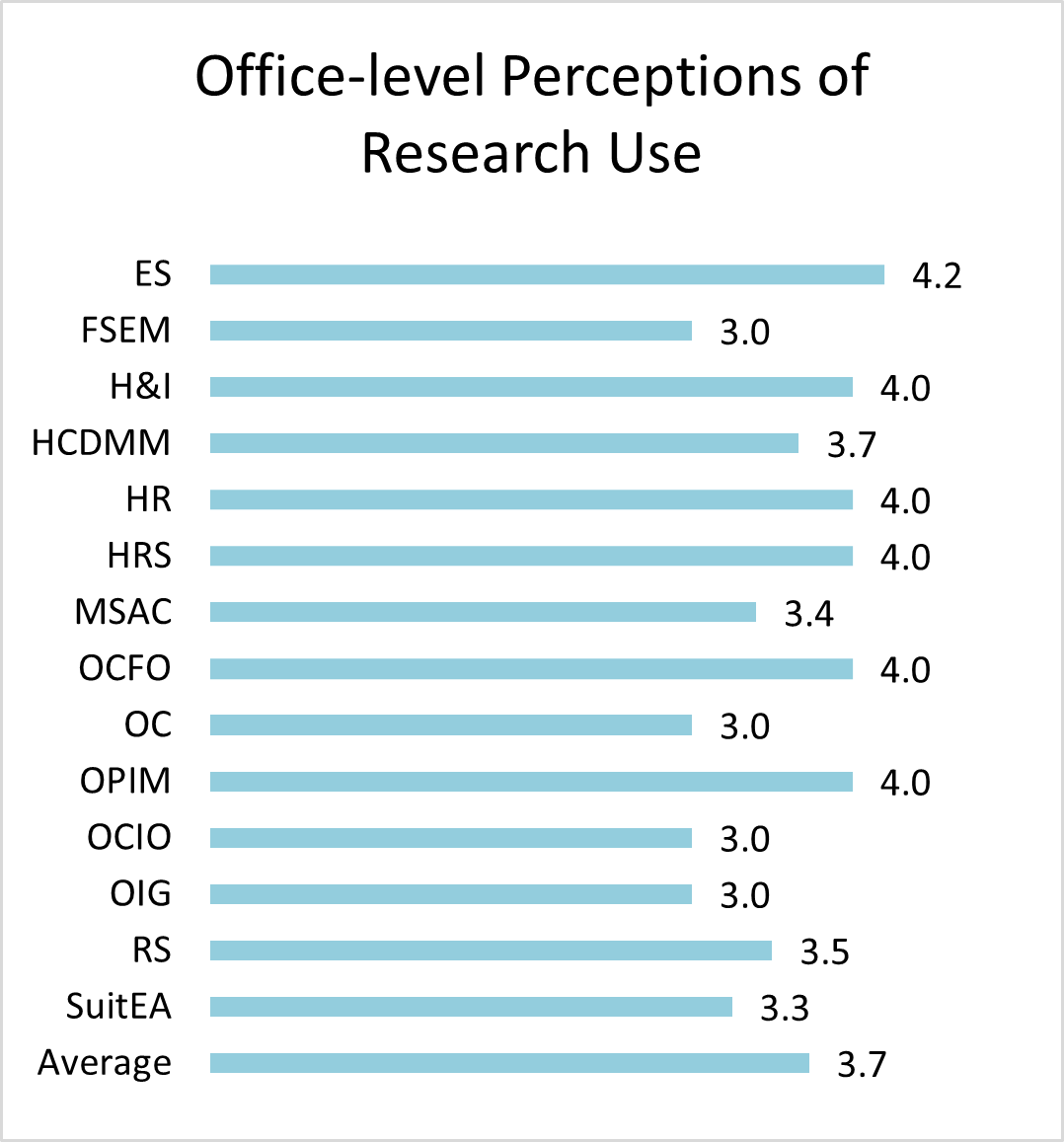

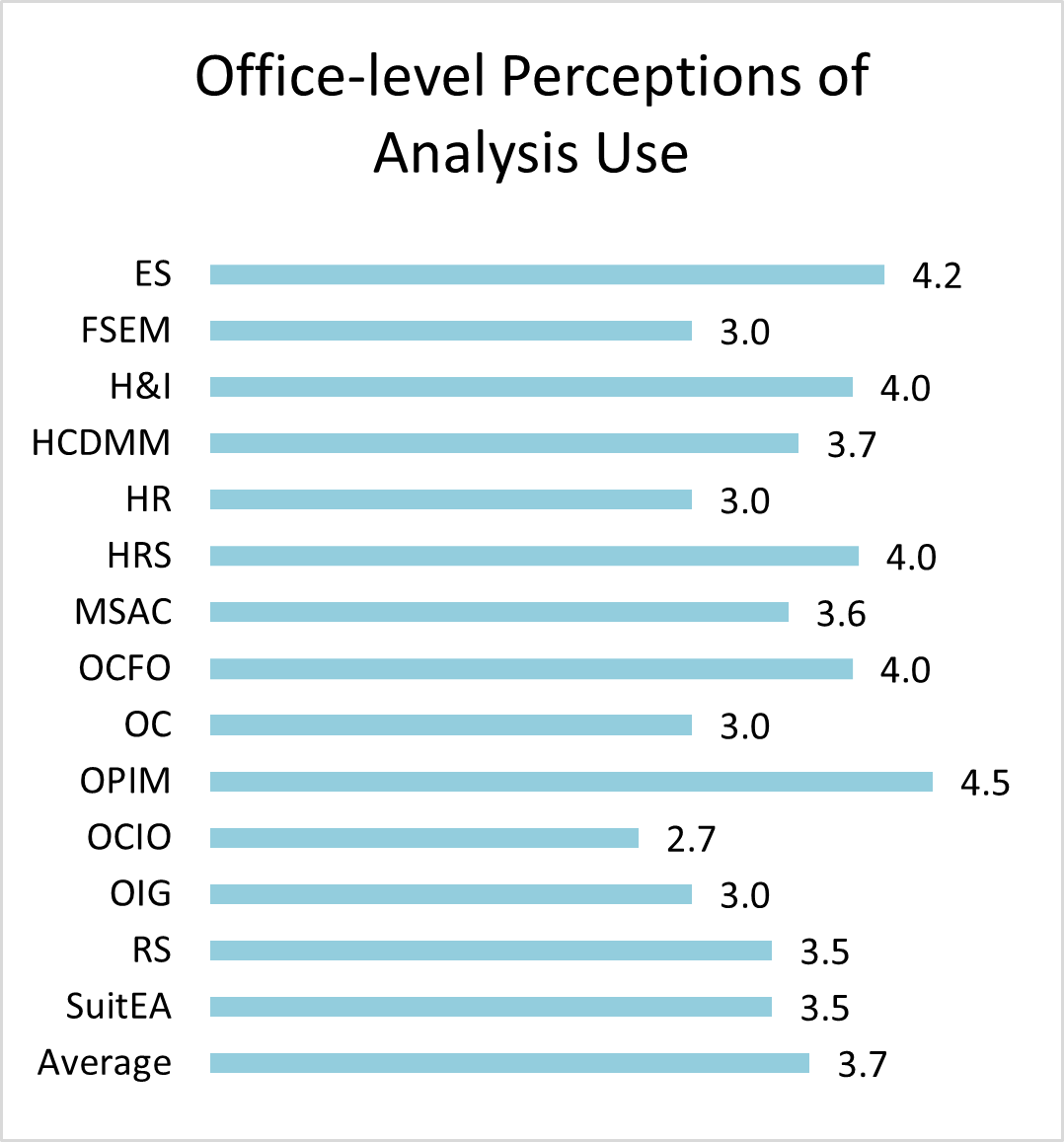

Agency use of data and evidence

OCFO’s Planning, Performance, and Evaluation Group manages quarterly data-driven organizational performance review meetings (Results OPM meetings) where agency leadership reviews performance results (via agency dashboards) and assesses progress toward the agency’s strategic goals. OPM plans to use existing agency-wide management processes, such as the Results OPM meetings, to promote the timely communication of results from evidence-building activities to inform decisions, improve policies and services, and support the agency in the achievement of its strategic goals.

OPM’s Planning, Performance, and Evaluation Group currently incorporates evidence-building activities (such as detailed analyses focused on a particular topic) into the Results OPM meetings and plans to incorporate other types of evidence (such as program evaluation results) to provide agency leadership with additional data on what is working, what is not, and what the agency needs to do to achieve its strategic goals. The team will also use these meetings to identify evidence gaps and needs that inform evidence activities and capacity-building needs.

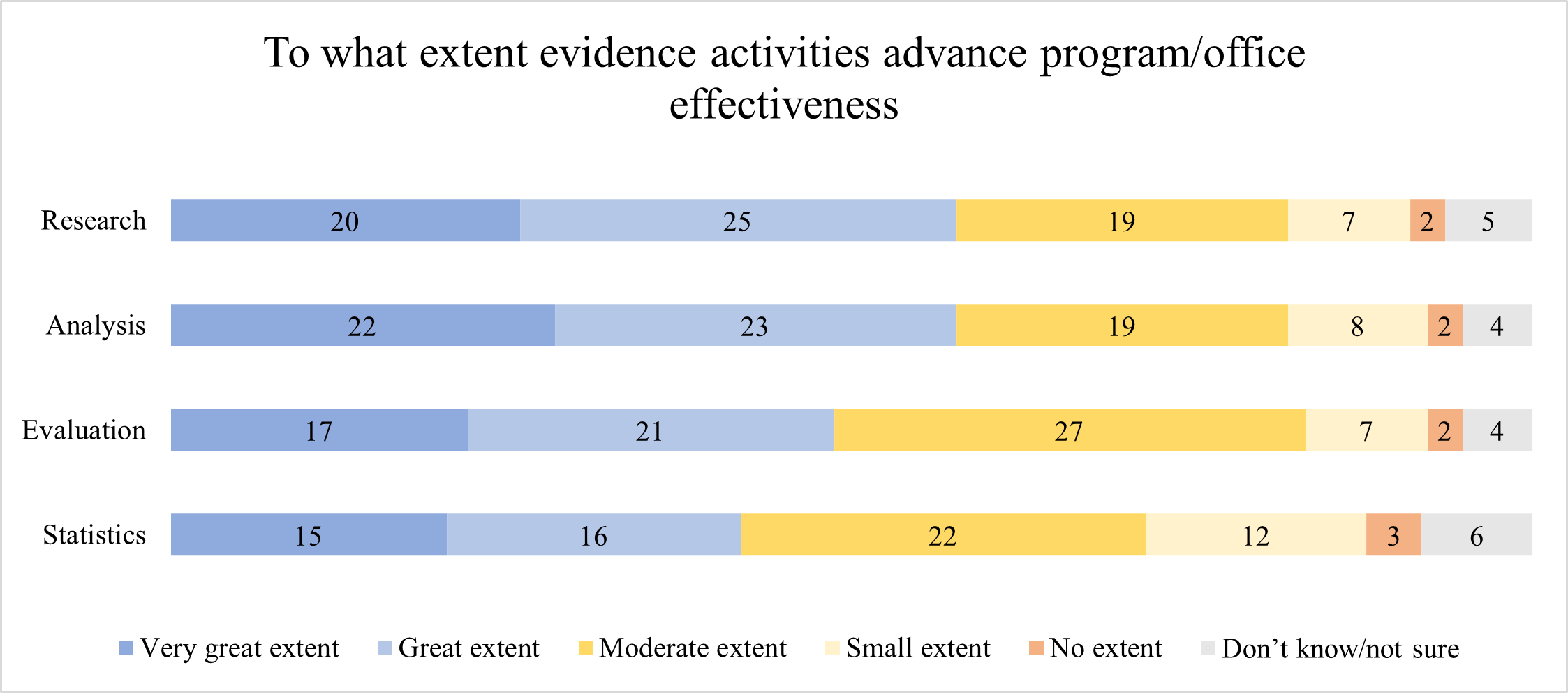

To examine agency perceptions of the effectiveness of its evidence activities in meeting the intended outcomes, OPM will leverage the GAO survey results as a baseline for evidence use.

The GAO survey assessed extent of use of evaluations to inform programs at OPM as compared to the federal government. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding evaluation use.

| Item | OPM | Federal Government |
|---|---|---|
| To what extent does your program(s) use results from evaluations for the following purposes? Implementing changes to improve program performance | 29.7% | 37.8% |
| To what extent does your program(s) use results from evaluations for the following purposes? Adopting new program approaches, operations, or processes | 29.7% | 37.0% |
| To what extent does your program(s) use results from evaluations for the following purposes? Sharing effective program approaches or lessons learned | 33.4% | 37.4% |
| To what extent does your program(s) use results from evaluations for the following purposes? Allocating resources within the program | 29.2% | 30.9% |
| To what extent does your program(s) use results from evaluations for the following purposes? Explaining or providing context for performance results | 30.8% | 36.1% |
| To what extent does your program(s) use results from evaluations for the following purposes? Informing the public about the program’s performance, as appropriate | 22.0% | 19.7% |

On every item except informing the public about program performance, OPM scored below the government in its use of evaluations. However, for both OPM and the government, missing/non-response was around 40 percent, suggesting that many respondents reported not conducting evaluations and thus were exempted from the question. The findings therefore suggest room for improvement in both the uptake of evaluations and the use of their results.
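The item-by-item comparison can be reproduced from the evaluation-use table above. In this sketch the item labels are shortened paraphrases of the survey wording, and a positive difference means OPM scored above the government-wide figure:

```python
# Percent answering "great" or "very great" extent: (OPM, government-wide).
# Labels are shortened paraphrases of the survey items.
EVAL_USE = {
    "Implementing changes to improve performance": (29.7, 37.8),
    "Adopting new approaches or processes": (29.7, 37.0),
    "Sharing effective approaches or lessons learned": (33.4, 37.4),
    "Allocating resources within the program": (29.2, 30.9),
    "Explaining performance results": (30.8, 36.1),
    "Informing the public": (22.0, 19.7),
}

# Items where OPM falls below, or rises above, the government-wide result.
below = [item for item, (opm, gov) in EVAL_USE.items() if opm < gov]
above = [item for item, (opm, gov) in EVAL_USE.items() if opm > gov]

for item, (opm, gov) in EVAL_USE.items():
    print(f"{opm - gov:+5.1f} pts  {item}")
```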

The GAO survey also assessed the use of information beyond performance information and evaluation, specifically administrative data, statistical data, and research and analysis. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding use of this information.

| Item | OPM | Federal Government |
|---|---|---|
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Providing context about the problem the program is designed to address | 43.3% | 31.7% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Setting or revising program priorities and goals | 39.0% | 31.9% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Developing program strategy | 39.6% | 33.5% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Explaining or providing context for performance results | 42.6% | 32.6% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Refining program performance measures | 37.8% | 29.4% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Allocating resources | 30.4% | 28.9% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Identifying opportunities to improve program performance | 39.5% | 33.7% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Identifying and sharing effective program approaches with others | 31.1% | 29.9% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Adopting new program approaches or changing work processes | 33.9% | 30.6% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Identifying opportunities to reduce, eliminate, or better manage duplicative activities | 30.7% | 30.1% |
| To what extent, if at all, do you use any of those types of information when participating in the following activities for the program(s) you are involved with? Coordinating program efforts within your agency or with other external entities | 29.9% | 27.4% |

On every item, OPM exceeded the government-wide results for use of administrative data, statistical data, and research and analysis. For both OPM and the government, missing/non-response was around 40 to 45 percent, suggesting that many respondents reported not using these other types of information and thus were exempted from the question. Nonetheless, OPM showed strong results in using this information to inform program development and strategy, understand program performance, and identify opportunities to improve. OPM shows room for improvement in allocating resources based on this information and in using it to share or coordinate with others.

OPM also used its internal survey to gather additional perceptions of how effectively its evidence activities inform programs, asking respondents the extent to which evidence activities advance program or office effectiveness.

To what extent evidence activities advance program/office effectiveness

OPM managers, supervisors, and senior leaders had mixed responses about the extent to which evidence is used for their program or office, with research and analysis used to the greatest extent to advance effectiveness.

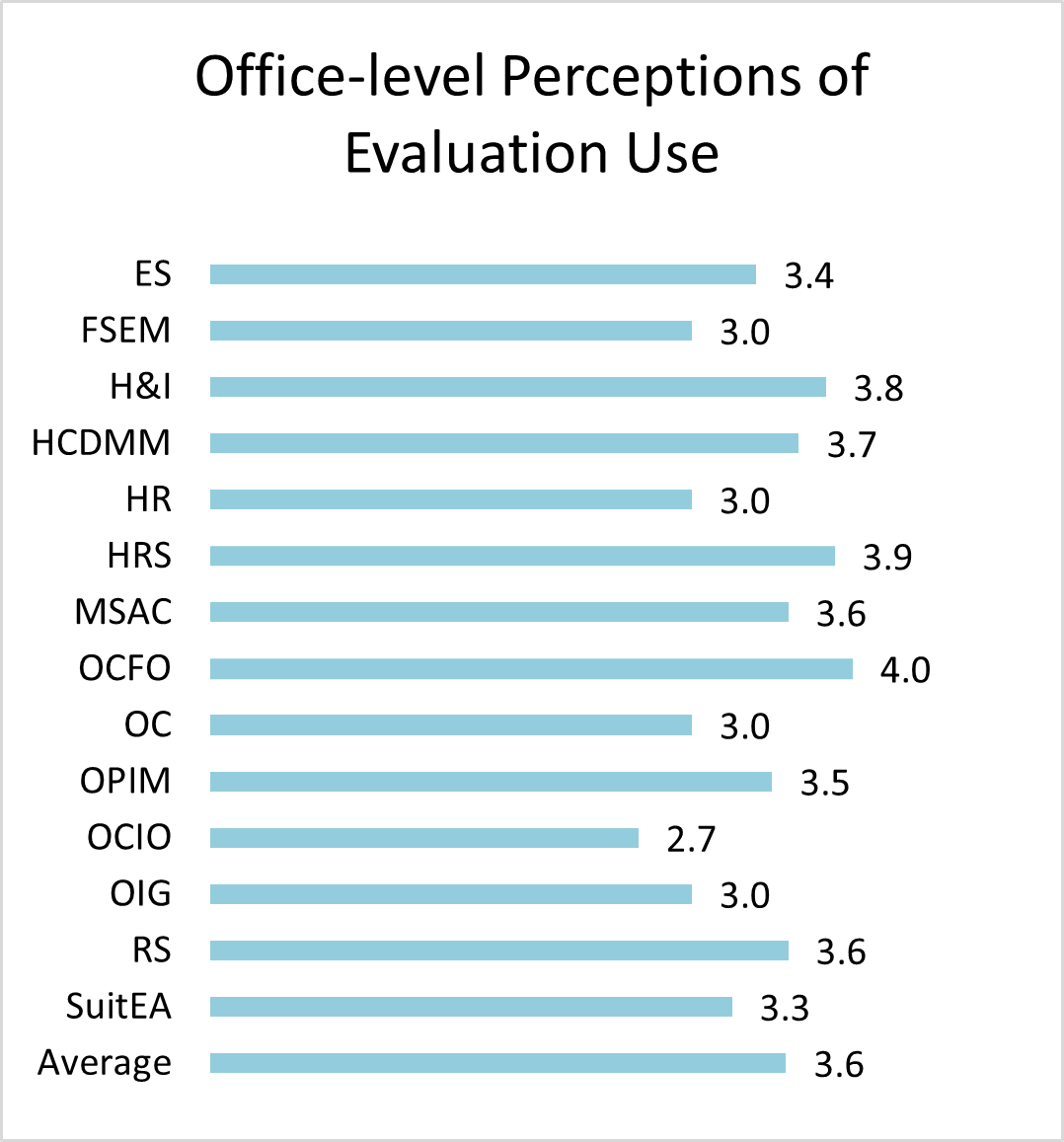

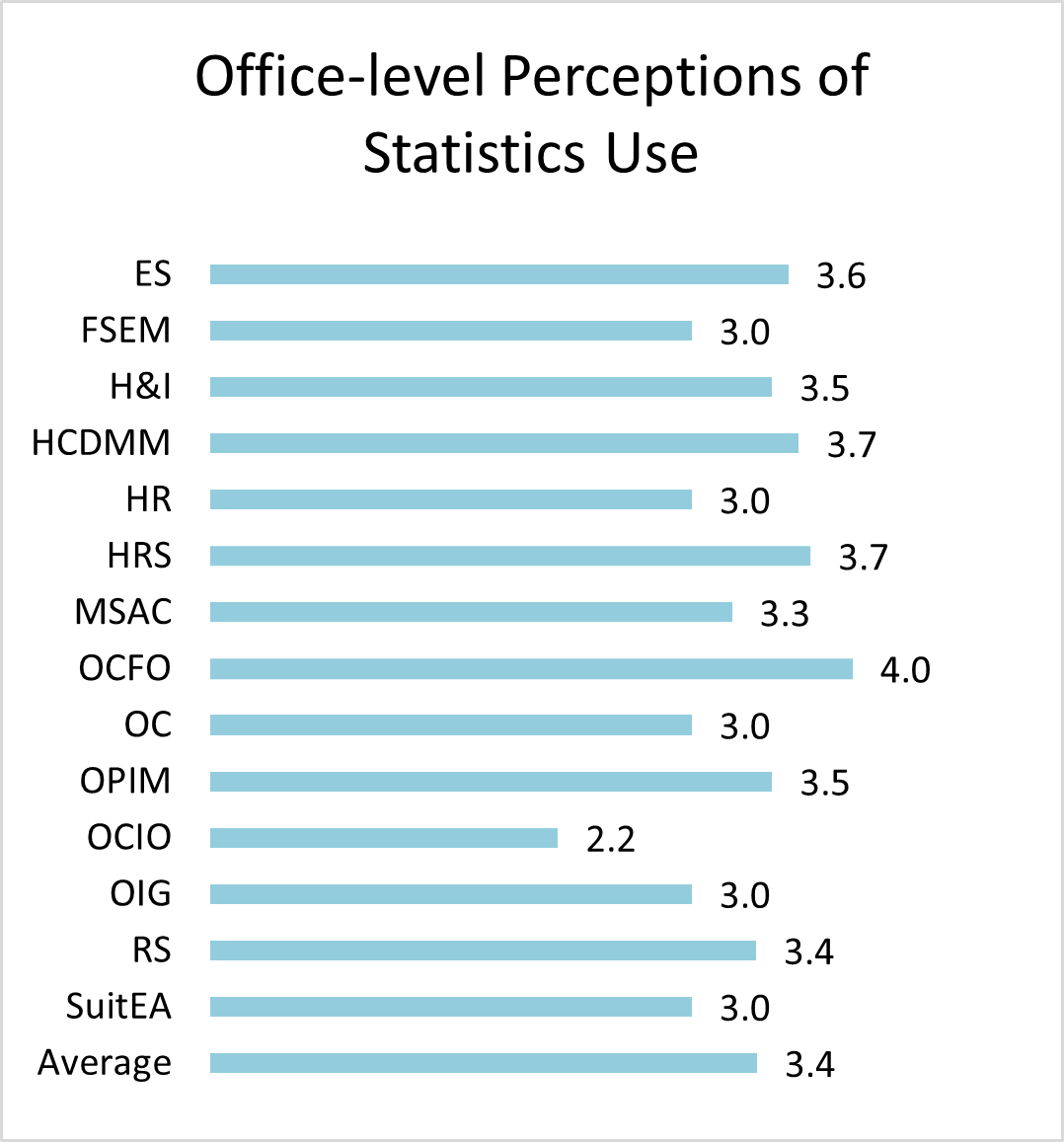

The office-level findings showing perceived use of each evidence activity are included in Annex A.

Overall, the findings suggest that there is a continued need to expand adoption of evidence activities and to emphasize the use of evidence results, particularly for statistics and evaluation and in managing resources and collaboration.

OPM has established partnerships and processes to plan, disseminate, and use priority evidence-building activities (such as research and analysis and/or evaluations that are in the agency’s learning agenda[45]) to inform decisions and improve policies and services.

Organizational capacity

OPM Research Steering Committee

OPM has established a research steering committee composed of senior executives and managers from across the agency, including the Chief Data Officer and the Statistical Official. The steering committee provides strategic guidance and direction on the development of the agency’s learning agenda. The committee identifies the knowledge gaps that inform the learning agenda, proposes draft research questions, and prioritizes and approves initial research questions for the learning agenda and annual evaluation plan[46]. The committee is co-chaired by the Evaluation Officer and a senior executive or leader appointed by the Director or Chief Management Officer.

OPM Research Work Group

OPM has established a work group composed of subject matter experts in research, evaluation, and statistics from across the agency, who may advise on (1) refining learning agenda research questions; (2) data, tools, methods, and/or analytic approaches to answer research questions; and (3) other evaluation and evidence-building activities. The group will also meet to exchange results of research and evaluation studies and to engage in capacity-building activities. The group is chaired by the Evaluation Officer or their designee.

Data Governance Board

OPM has established a Data Governance Board composed of senior-level staff from programmatic, data, and mission support offices, including the Evaluation Officer and the Statistical Official. The board provides guidance and direction on data strategy, management, and use. It also develops and maintains the data inventory and manages open access to data. The board is chaired by the Chief Data Officer.

Leadership support

To assess leadership support for evidence activities and evaluation, OPM will use the GAO survey results as a baseline. The Managing for Results survey asked about commitment of top leadership to using evaluations and additional types of information (administrative and statistical data and research and analysis). Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding leadership support for evidence activities.

| Item | OPM | Federal Government |
|---|---|---|
| Agency top leadership was committed to using evaluations | 20.7% | 29.0% |
| To what extent, if at all, do you agree with the following statements about additional types of information (i.e., administrative, and statistical data, and research and analysis)? My agency’s top leadership demonstrates a strong commitment to using a variety of data and information in decision making (including performance information, program evaluations, and additional types of information) | 25.6% | 44.3% |

OPM managers reported lower levels of top leadership commitment to using evaluations and other types of information than managers government-wide. However, these results should be considered in the context of changing administrations; top leadership may include political appointees who are no longer at OPM.

Agency resources

To assess perceptions of access to key evidence resources, OPM will use the GAO survey results regarding access to evidence tools and resources.

The Managing for Results survey asked about availability of a variety of evidence-related tools and resources. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding evidence tools and resources.

| Item | OPM | Federal Government |
|---|---|---|
| I have access to the analytic tools needed to collect, analyze, and use performance information | 21.4% | 25.8% |
| To what extent, if at all, do you agree with the following statements about additional types of information (i.e., administrative, and statistical data, and research and analysis)? I have access to the analytical tools needed to collect, analyze, and use these additional types of information | 22.8% | 39.3% |
| To what extent, if at all, do you agree with the following statements about additional types of information (i.e., administrative, and statistical data, and research and analysis)? My agency is investing resources to improve its capacity to collect, analyze, and use these additional types of information | 18.3% | 32.1% |
| To what extent, if at all, do you agree with the following statements about additional types of information (i.e., administrative, and statistical data, and research and analysis)? My agency has information systems and processes in place to protect the privacy and security of its data | 48.0% | 56.0% |

OPM managers reported less access to data tools and resources than managers government-wide. These findings suggest areas for improvement in investment in resources, particularly for the collection and analysis of data.

Provision of training

OPM provides ongoing training on specific evidence skills, such as the use of data visualization software. Additionally, OPM intends to launch and use a researcher and evaluator community of practice, which will include a capacity-building component.

To assess current perceptions of training provision, OPM will use the GAO survey as a baseline. The Managing for Results survey asked whether training on a variety of evidence skills was offered to staff.

Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding evaluation training.

| Item | OPM | Federal Government |
|---|---|---|
| Program staff receive training in program evaluation (e.g., formal classroom training, conferences, on the job training) | 22.3% | 25.3% |

Below are the results showing the percentage of managers at OPM and government-wide who responded “yes” that a particular type of training was offered to them in the past three years.

| Item | OPM | Federal Government |
|---|---|---|
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Assess the quality of performance data | 43.3% | 52.6% |
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Use program performance information to make decisions | 44.7% | 56.8% |
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Identify and collect additional types of information, such as administrative or statistical data, or research and analysis | 26.8% | 30.7% |
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Assess the quality of data, such as administrative or statistical data | 25.5% | 30.4% |
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Assess the credibility of research, data, and analysis | 21.9% | 27.2% |
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Analyze administrative and statistical data, and research and analysis to draw conclusions or inform decisions | 28.1% | 30.8% |
| During the past 3 years, has your agency provided, arranged, or paid for training that would help you to accomplish the following tasks? Better understand how to choose the right type(s) of information for different kinds of decisions | 26.3% | 30.5% |

OPM managers reported lower provision of training than managers government-wide in every evidence domain. While nearly half reported receiving training related to performance information, self-reported receipt of training in all other areas was low, indicating an opportunity to expand access to evidence-related training within OPM.

OPM intends to focus on improving staff capacity in its FY 2022-2026 strategic plan through provision of training, and hopes to leverage data, research, and evaluation communities to expand training.

Staff capacity

As of FY 2021, OPM offices identified 334 staff who spend at least 25 percent of their time on research and/or analysis, 12 who spend at least 25 percent of their time on statistical work, and 4 who spend at least 25 percent of their time on evaluation work.

OPM’s FY 2022 Congressional Budget Justification includes funding for a full-time Chief Data Officer as well as two additional central research and evaluation staff in the OCFO Planning, Performance, and Evaluation Group.

OPM offices identified staff with specific key skills (for example, survey analysis, regression analysis, data science, customer experience research, policy analysis, and impact evaluation). OPM also identified staff proficient in data-related software, including SAS, SPSS, R, TOAD, Tableau, and Power BI.

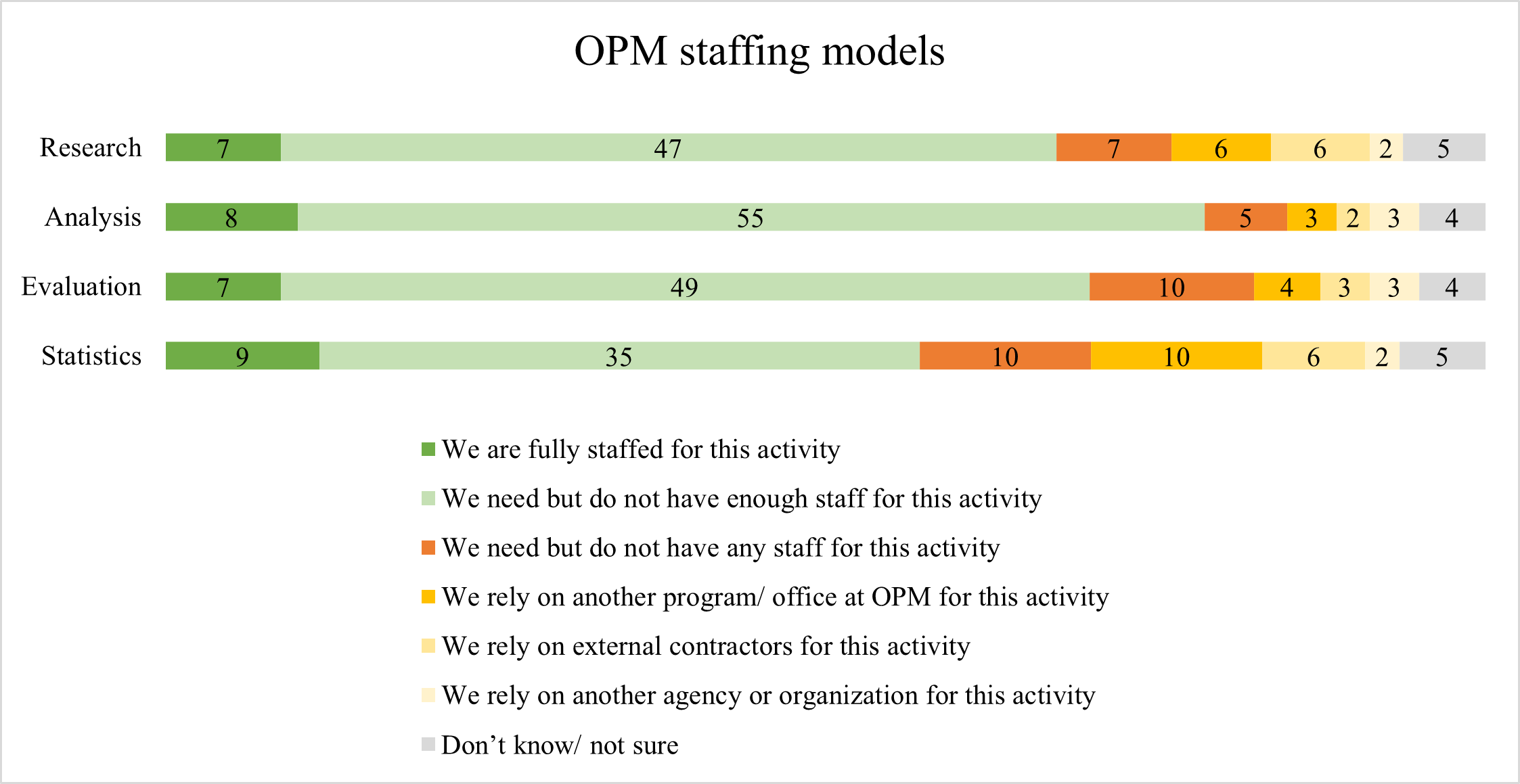

To better understand evidence staffing and capacity, OPM’s internal survey asked about staffing models for evidence activities and, for offices with evidence staff, about those staff’s capacity to conduct evidence activities.

OPM managers, supervisors, and senior leaders were asked to describe their staffing model for each evidence activity, including options for internal and external staffing.

OPM staffing models

For all evidence activities, the most common response was that program offices needed but did not have enough staff to accomplish evidence activities; the greatest perceived needs were for analysis and evaluation staffing.

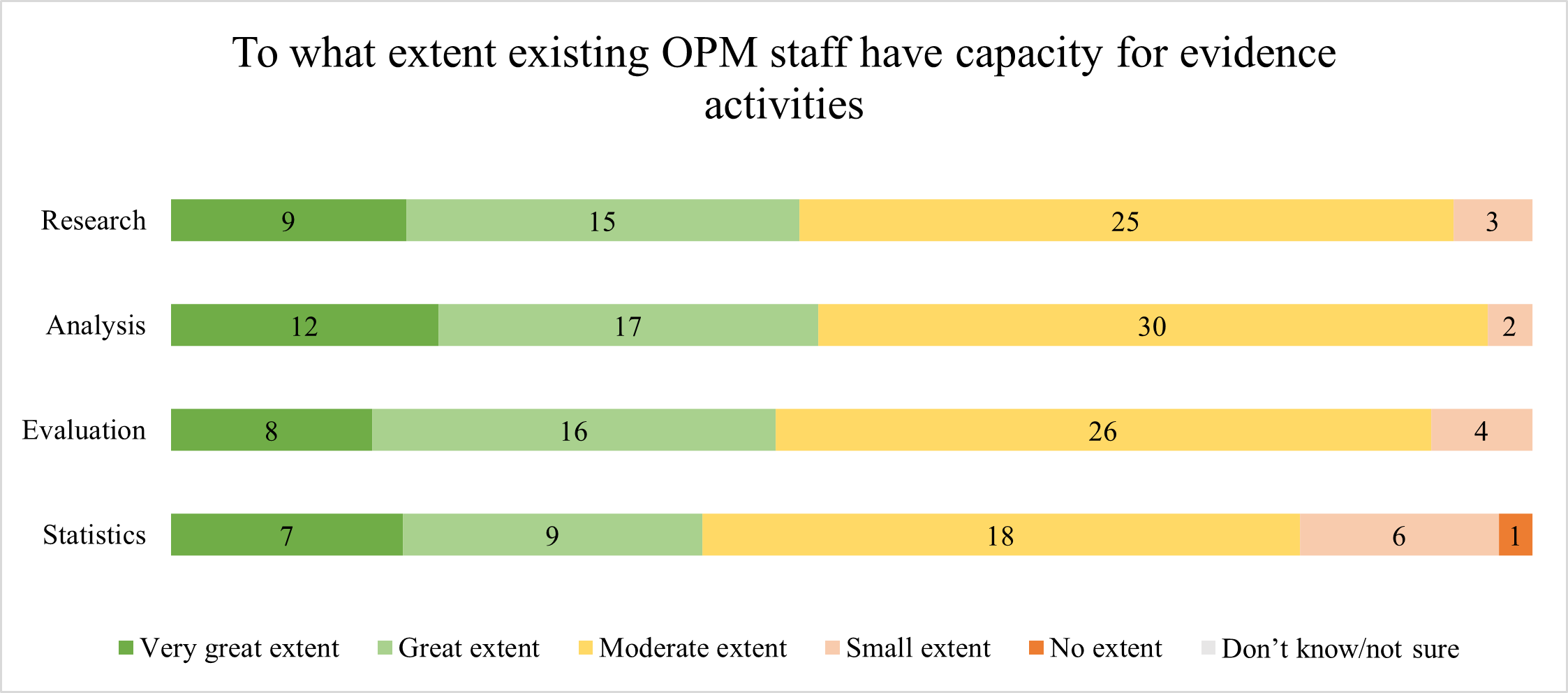

For those offices with staffing, respondents were asked to assess the extent of staff capacity for evidence activities.

To what extent OPM staff have capacity for evidence activities

Assessments of staff capacity varied, but about half of respondents rated their evidence staff’s capacity as “moderate.”

To assess perceptions of staff data skills, OPM additionally used the GAO survey results as a baseline. The Managing for Results survey includes questions about managers’ appraisals of various staff skills. Below are the results showing the percentage of managers at OPM and government-wide who responded “great extent” or “very great extent” (the top two Likert scale options) in response to items regarding staff capacity.

| Item | OPM | Federal Government |
|---|---|---|
| For the program(s) that you are involved with, to what extent, if at all, do you agree with the following statements? Staff involved in the program(s) collectively have the knowledge and skills needed to collect, analyze, and use performance information | 42.3% | 50.5% |
| To what extent do you agree with the following statements about staff collectively in your programs? Program staff have the skills necessary to conduct program evaluations | 30.3% | 32.7% |
| To what extent do you agree with the following statements about staff collectively in your programs? Program staff have the skills necessary to understand program evaluation methods, results, and limitations | 32.4% | 33.0% |
| To what extent do you agree with the following statements about staff collectively in your programs? Program staff have the skills necessary to implement evaluation recommendations | 39.3% | 35.3% |
| To what extent, if at all, do you agree with the following statements about additional types of information (i.e., administrative, and statistical data, and research and analysis)? Program staff collectively have the knowledge and skills needed to collect, analyze, and use these additional types of information | 30.0% | 27.0% |

OPM underperformed the government in three domains related to staff skills and outperformed it in two (implementing evaluation recommendations, and collecting, analyzing, and using additional types of information). Overall, the results mirror the patterns in OPM’s internal survey, indicating a need for greater knowledge and skills among staff to conduct all types of evidence activities.

To gain even more granular information on staff capacity, OPM will implement the Competency Exploration Development and Readiness (CEDAR) tool, which measures employee perceptions of their skill level in key competencies as well as their supervisor’s appraisal of their skills. OPM is developing an evidence- and data science-specific set of competencies, and this tool will be deployed to staff working on evidence generation activities to assess their skills. These findings will be used to identify and target specific staff capacity-building activities.

All Likert-scaled responses have been converted to numeric scores (1 = no extent through 5 = very great extent) and averaged for ease of comparison between offices. Non-response and not applicable responses were excluded from the calculations.
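The conversion described above amounts to a count-weighted mean over the scored responses. The sketch below is illustrative only: the `SCORES` mapping, the `likert_mean` helper, and the choice to treat “Don’t know/not sure” and “N/A” as the excluded categories are assumptions, and the example applies the calculation to the agency-wide research-quality counts from the evidence-quality table rather than to any single office.

```python
from typing import Mapping, Optional

# Numeric score for each response option (1 = no extent ... 5 = very great).
# ASSUMPTION: options mapped to None count as non-response/not applicable.
SCORES: Mapping[str, Optional[int]] = {
    "Very great extent": 5,
    "Great extent": 4,
    "Moderate extent": 3,
    "Small extent": 2,
    "No extent": 1,
    "Don't know/not sure": None,
    "N/A": None,
}


def likert_mean(counts: Mapping[str, int]) -> float:
    """Average score across scored responses, weighting each option by its count."""
    weighted_sum = 0
    n_scored = 0
    for option, count in counts.items():
        score = SCORES[option]
        if score is None:
            continue  # excluded from the calculation
        weighted_sum += score * count
        n_scored += count
    if n_scored == 0:
        raise ValueError("no scored responses to average")
    return weighted_sum / n_scored


# Illustration: agency-wide research-quality counts.
research_quality = {
    "Very great extent": 18,
    "Great extent": 24,
    "Moderate extent": 25,
    "Small extent": 4,
    "No extent": 1,
    "Don't know/not sure": 6,
    "N/A": 2,
}
print(likert_mean(research_quality))  # 270 / 72 = 3.75
```

Dropping the unscored categories before dividing keeps “don’t know” answers from pulling the average toward either end of the scale.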

Responses by OPM office

Office-level Need and Coverage

Office-level Research Need and Coverage

Office-level Analysis Need and Coverage

Office-level Evaluation Need and Coverage

Office-level Statistics Need and Coverage

Office-level Perceptions of Quality

Office-level Perceptions of Research Quality

Office-level Perceptions of Analysis Quality

Office-level Perceptions of Evaluation Quality

Office-level Perceptions of Statistics Quality

Office-level Perceptions of Rigor

Office-level Perceptions of Research Rigor

Office-level Perceptions of Analysis Rigor

Office-level Perceptions of Evaluation Rigor

Office-level Perceptions of Statistics Rigor

Office-level Perceptions of Independence

Office-level Perceptions of Research Independence

Office-level Perceptions of Analysis Independence

Office-level Perceptions of Evaluation Independence

Office-level Perceptions of Statistics Independence

Office-level Perceptions of Use

Office-level Perceptions of Research Use

Office-level Perceptions of Analysis Use

Office-level Perceptions of Evaluation Use

Footnote 42

See 5 U.S.C. § 313, “Evaluation Officers”

Footnote 43

Pub. L. No. 115-435, 132 Stat. 5529, § 315

Footnote 44

OMB Circular No. A-11, Preparation, Submission, and Execution of the Budget (June 2019), Part 6.

Footnote 45

The learning agenda identifies the key questions the agency wants to answer to improve operational and programmatic outcomes and develop appropriate policies and regulations to support mission accomplishment. It provides an evidence-building roadmap to support effective and efficient agency functioning.

Footnote 46

In accordance with OMB Circular A-11 Part 6 Section 290, OPM annually prepares a plan describing its evaluation activities for the subsequent year, including the key questions for each planned “significant” evaluation study, as well as the key information collections or acquisitions for research support the agency plans to begin. It is published along with the Annual Performance Plan.

Types of Evidence

Policy Analysis: Analysis of data, such as general-purpose survey or program-specific data, to generate and inform policy, e.g., estimating regulatory impacts and other relevant effects

Program Evaluation: Systematic analysis of a program, policy, organization, or component of these to assess effectiveness and efficiency

Foundational Fact Finding: Foundational research and analysis such as aggregate indicators, exploratory studies, descriptive statistics, and basic research

Performance Measurement: Ongoing, systematic tracking of information relevant to policies, strategies, programs, projects, goals/objectives, and/or activities

Types of Evidence

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 35 | 44 | 35 | 30 |
| Great extent | 26 | 26 | 28 | 23 |
| Moderate extent | 18 | 10 | 14 | 17 |
| Small extent | 1 | 0 | 3 | 8 |
| No extent (this is not relevant for my program/office) | 3 | 2 | 2 | 3 |
| Don’t know/not sure | 2 | 2 | 2 | 2 |

Types of Evidence

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 24 | 23 | 18 | 14 |
| Great extent | 26 | 23 | 21 | 25 |
| Moderate extent | 17 | 23 | 25 | 20 |
| Small extent | 8 | 9 | 12 | 13 |
| No extent (this is not relevant for my program/office) | 0 | 0 | 0 | 1 |
| Don’t know/not sure | 5 | 4 | 4 | 6 |

To what extent evidence results are of high quality

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 18 | 17 | 13 | 7 |
| Great extent | 24 | 30 | 24 | 19 |
| Moderate extent | 25 | 21 | 25 | 30 |
| Small extent | 4 | 3 | 7 | 10 |
| No extent | 1 | 2 | 2 | 2 |
| Don’t know/not sure | 6 | 5 | 6 | 8 |
| N/A (this is not relevant for my program/office) | 2 | 2 | 2 | 4 |
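The office-level perception scores later in this appendix are averages on what appears to be a five-point scale. The sketch below shows one way to turn the response counts in the quality table above into a mean score. It assumes a coding of Very great extent = 5 down to No extent = 1 and excludes the “Don’t know/not sure” and “N/A” rows; that scoring scheme and the helper name `mean_score` are illustrative assumptions, not the documented method for this survey.

```python
# Sketch: weighted mean of Likert-style response counts.
# ASSUMPTION: the 5-point coding below; "Don't know/not sure" and "N/A"
# responses carry no score and are excluded from the denominator.
SCALE = {
    "Very great extent": 5,
    "Great extent": 4,
    "Moderate extent": 3,
    "Small extent": 2,
    "No extent": 1,
}

# Scored rows of the "evidence results are of high quality" table.
QUALITY_COUNTS = {
    "Research": {"Very great extent": 18, "Great extent": 24, "Moderate extent": 25, "Small extent": 4, "No extent": 1},
    "Analysis": {"Very great extent": 17, "Great extent": 30, "Moderate extent": 21, "Small extent": 3, "No extent": 2},
    "Evaluation": {"Very great extent": 13, "Great extent": 24, "Moderate extent": 25, "Small extent": 7, "No extent": 2},
    "Statistics": {"Very great extent": 7, "Great extent": 19, "Moderate extent": 30, "Small extent": 10, "No extent": 2},
}


def mean_score(counts: dict) -> float:
    """Hypothetical helper: weighted mean over scored responses only."""
    scored = {label: n for label, n in counts.items() if label in SCALE}
    total = sum(scored.values())
    if total == 0:
        raise ValueError("no scored responses")
    return sum(SCALE[label] * n for label, n in scored.items()) / total


if __name__ == "__main__":
    for activity, counts in QUALITY_COUNTS.items():
        print(f"{activity}: {mean_score(counts):.2f}")
```

Under this coding the Research column works out to 270/72 = 3.75, which rounds to the 3.8 reported as the Average for research quality. Other columns need not match exactly, since the office-level averages may have been computed differently.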

To what extent evidence activities are technically rigorous

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 12 | 12 | 11 | 9 |
| Great extent | 21 | 29 | 26 | 20 |
| Moderate extent | 24 | 19 | 19 | 21 |
| Small extent | 15 | 13 | 15 | 15 |
| No extent | 2 | 3 | 4 | 5 |
| Don’t know/not sure | 5 | 3 | 4 | 8 |
| N/A (this is not relevant for my program/office) | 2 | 2 | 2 | 3 |

To what extent evidence activities are free from undue influence or bias

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 21 | 23 | 19 | 18 |
| Great extent | 28 | 29 | 29 | 26 |
| Moderate extent | 12 | 10 | 12 | 10 |
| Small extent | 6 | 6 | 6 | 6 |
| No extent | 3 | 3 | 4 | 4 |
| Don’t know/not sure | 7 | 6 | 7 | 10 |
| N/A (this is not relevant for my program/office) | 3 | 3 | 3 | 5 |

To what extent evidence activities advance program/office effectiveness

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 20 | 22 | 17 | 15 |
| Great extent | 25 | 23 | 21 | 16 |
| Moderate extent | 19 | 19 | 27 | 22 |
| Small extent | 7 | 8 | 7 | 12 |
| No extent | 2 | 2 | 2 | 3 |
| Don’t know/not sure | 5 | 4 | 4 | 6 |
| N/A (this is not relevant for my program/office) | 1 | 1 | 1 | 4 |

OPM staffing models

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| We are fully staffed for this activity | 7 | 8 | 7 | 9 |
| We need but do not have enough staff for this activity | 47 | 55 | 49 | 35 |
| We need but do not have any staff for this activity | 7 | 5 | 10 | 10 |
| We rely on another program/office at OPM for this activity | 6 | 3 | 4 | 10 |
| We rely on external contractors for this activity | 6 | 2 | 3 | 6 |
| We rely on another agency or organization for this activity | 2 | 3 | 3 | 2 |
| Don’t know/not sure | 5 | 4 | 4 | 5 |

To what extent OPM staff have capacity for evidence activities

| | Research | Analysis | Evaluation | Statistics |
|---|---|---|---|---|
| Very great extent | 9 | 12 | 8 | 7 |
| Great extent | 15 | 17 | 16 | 9 |
| Moderate extent | 25 | 30 | 26 | 18 |
| Small extent | 3 | 2 | 4 | 6 |
| No extent | 0 | 0 | 0 | 1 |
| Don’t know/not sure | 0 | 0 | 0 | 0 |
| N/A (this is not relevant for my program/office) | 0 | 0 | 0 | 1 |

Responses by OPM office

| Office | Number of respondents | Response rate |
|---|---|---|
| OGC (7) | 1 | 14% |
| OD (12) | 1 | 8% |
| OPIM (3) | 2 | 67% |
| OC (3) | 3 | 100% |
| OIG (29) | 3 | 10% |
| FSEM (15) | 4 | 27% |
| HR (5) | 4 | 80% |
| Other | 4 | |
| SuitEA (6) | 4 | 67% |
| HCDMM (8) | 5 | 63% |
| OCFO (19) | 6 | 32% |
| H&I (26) | 7 | 27% |
| MSAC (16) | 7 | 44% |
| ES (25) | 8 | 32% |
| OCIO (38) | 15 | 39% |
| RS (81) | 23 | 28% |
| HRS (58) | 35 | 60% |
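The percentages in the responses table are consistent with dividing each office's respondent count by the number shown in parentheses. The sketch below reproduces that arithmetic, assuming the parenthetical figure is the number of staff asked to respond; the dictionary and the helper `rate_percent` are illustrative names, not part of the assessment.

```python
# Sketch: recompute response rates for the "Responses by OPM office" table.
# ASSUMPTION: the number in parentheses after each office is the number of
# staff asked to respond. "Other" has no denominator and is left out.
INVITED_AND_RESPONDED = {
    "OGC": (7, 1), "OD": (12, 1), "OPIM": (3, 2), "OC": (3, 3),
    "OIG": (29, 3), "FSEM": (15, 4), "HR": (5, 4), "SuitEA": (6, 4),
    "HCDMM": (8, 5), "OCFO": (19, 6), "H&I": (26, 7), "MSAC": (16, 7),
    "ES": (25, 8), "OCIO": (38, 15), "RS": (81, 23), "HRS": (58, 35),
}


def rate_percent(invited: int, responded: int) -> int:
    """Response rate as a whole percent, rounding halves up."""
    # int(x + 0.5) rounds half up for non-negative x; Python's built-in
    # round() uses round-half-to-even and would turn 62.5 into 62.
    return int(100 * responded / invited + 0.5)


rates = {office: rate_percent(n, r) for office, (n, r) in INVITED_AND_RESPONDED.items()}
total_invited = sum(n for n, _ in INVITED_AND_RESPONDED.values())
total_responded = sum(r for _, r in INVITED_AND_RESPONDED.values())
overall = 100 * total_responded / total_invited

if __name__ == "__main__":
    for office, pct in rates.items():
        print(f"{office}: {pct}%")
    print(f"Overall (excluding Other): {total_responded}/{total_invited} = {overall:.1f}%")
```

Rounding halves up reproduces every percentage in the table, including 63% for HCDMM (5 of 8). Summed across offices with a known denominator, 128 of 351 staff responded (about 36.5%); the 4 “Other” respondents are excluded because no denominator is given.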

Office-level Research Need and Coverage

| Office | Research need | Research coverage |
|---|---|---|
| H&I | 4.7 | 3.4 |
| OCFO | 4.3 | 3.3 |
| MSAC | 4.4 | 3.8 |
| OC | 3.5 | 3.0 |
| RS | 4.2 | 3.7 |
| HRS | 4.2 | 3.8 |
| ES | 3.8 | 3.5 |
| Average | 4.1 | 3.8 |
| FSEM | 4.0 | 4.0 |
| HR | 3.0 | 3.0 |
| OIG | 3.0 | 3.0 |
| SuitEA | 4.8 | 4.8 |
| OPIM | 4.0 | 4.5 |
| HCDMM | 4.3 | 5.0 |
| OCIO | 3.3 | 4.0 |

Office-level Analysis Need and Coverage

| Office | Analysis need | Analysis coverage |
|---|---|---|
| MSAC | 4.8 | 3.8 |
| OCFO | 4.3 | |
| H&I | 4.7 | 3.9 |
| SuitEA | 4.8 | 4.0 |
| HRS | 4.5 | 3.9 |
| Average | 4.3 | 3.8 |
| RS | 4.2 | 3.6 |
| ES | 4.0 | 3.5 |
| OC | 3.5 | 3.0 |
| OIG | 3.5 | 3.0 |
| HCDMM | 5.0 | 4.7 |
| OCIO | 3.7 | 3.5 |
| FSEM | 4.0 | 4.0 |
| HR | 3.0 | 3.0 |
| OPIM | 4.5 | 5.0 |
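Rows in the need and coverage tables appear to be ordered roughly by how far perceived need exceeds perceived coverage. The sketch below makes that comparison explicit for the analysis table. It assumes the gap is simply need minus coverage on the same scale, and it keeps OCFO's blank coverage cell as missing rather than guessing a value; names such as `gap` and `ANALYSIS` are illustrative.

```python
# Sketch: rank offices by the gap between perceived analysis need and
# coverage. ASSUMPTION: gap = need - coverage on the survey's scale.
# OCFO's coverage cell is blank in the table, so it is stored as None.
from typing import Optional

ANALYSIS = {
    "MSAC": (4.8, 3.8), "OCFO": (4.3, None), "H&I": (4.7, 3.9),
    "SuitEA": (4.8, 4.0), "HRS": (4.5, 3.9), "RS": (4.2, 3.6),
    "ES": (4.0, 3.5), "OC": (3.5, 3.0), "OIG": (3.5, 3.0),
    "HCDMM": (5.0, 4.7), "OCIO": (3.7, 3.5), "FSEM": (4.0, 4.0),
    "HR": (3.0, 3.0), "OPIM": (4.5, 5.0),
}


def gap(need: float, coverage: Optional[float]) -> Optional[float]:
    """Need minus coverage, rounded to one decimal; None if data is missing."""
    if coverage is None:
        return None
    return round(need - coverage, 1)


gaps = {office: gap(need, cov) for office, (need, cov) in ANALYSIS.items()}
# Drop offices with missing data, then sort largest unmet need first.
# sorted() is stable, so tied offices keep their table order.
ranked = sorted(
    ((office, g) for office, g in gaps.items() if g is not None),
    key=lambda item: item[1],
    reverse=True,
)
under_covered = [office for office, g in ranked if g > 0]

if __name__ == "__main__":
    for office, g in ranked:
        print(f"{office}: {g:+.1f}")
```

A positive gap marks an office whose perceived need outstrips coverage (MSAC leads at 1.0); a negative gap, as for OPIM, means coverage was rated above need. The same function applies unchanged to the research, evaluation, and statistics tables.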

Office-level Evaluation Need and Coverage

| Office | Evaluation need | Evaluation coverage |
|---|---|---|
| H&I | 4.6 | 3.6 |
| SuitEA | 4.3 | 3.3 |
| OCFO | 4.3 | 3.3 |
| ES | 3.8 | 3.0 |
| HRS | 4.4 | 3.7 |
| MSAC | 4.2 | 3.6 |
| Average | 4.1 | 3.5 |
| RS | 3.9 | 3.5 |
| OCIO | 3.7 | 3.5 |
| FSEM | 4.0 | 4.0 |
| HCDMM | 4.3 | 4.3 |
| HR | 3.0 | 3.0 |
| OC | 3.0 | 3.0 |
| OPIM | 4.0 | 4.0 |
| OIG | 3.0 | 3.0 |

Office-level Statistics Need and Coverage

| Office | Statistics need | Statistics coverage |
|---|---|---|
| H&I | 4.4 | 3.1 |
| HCDMM | 5.0 | 4.0 |
| RS | 4.1 | 3.4 |
| OCFO | 4.0 | 3.3 |
| Average | 3.9 | 3.5 |
| OCIO | 3.9 | 3.5 |
| ES | 3.8 | 3.5 |
| MSAC | 3.8 | 3.5 |
| HRS | 3.8 | 3.6 |
| FSEM | 4.0 | 4.0 |
| OPIM | 4.0 | 4.0 |
| OIG | 3.0 | 3.0 |
| SuitEA | 3.3 | 3.5 |
| OC | 2.5 | 3.0 |
| HR | 1.0 | 2.0 |

Office-level Perceptions of Research Quality

| Office | Research |
|---|---|
| ES | 4.3 |
| FSEM | 5.0 |
| H&I | 3.4 |
| HCDMM | 2.7 |
| HR | 5.0 |
| HRS | 4.0 |
| MSAC | 3.2 |
| OCFO | 3.7 |
| OC | 3.0 |
| OPIM | 4.0 |
| OCIO | 3.2 |
| OIG | 4.0 |
| RS | 3.6 |
| SuitEA | 4.0 |
| Average | 3.8 |

Office-level Perceptions of Analysis Quality

| Office | Analysis |
|---|---|
| ES | 4.5 |
| FSEM | 5.0 |
| H&I | 3.4 |
| HCDMM | 2.7 |
| HR | 3.0 |
| HRS | 4.1 |
| MSAC | 3.4 |
| OCFO | 3.7 |
| OC | 3.0 |
| OPIM | 4.0 |
| OCIO | 2.8 |
| OIG | 4.0 |
| RS | 3.6 |
| SuitEA | 4.5 |
| Average | 3.8 |

Office-level Perceptions of Evaluation Quality

| Office | Evaluation |
|---|---|
| ES | 3.5 |
| FSEM | 5.0 |
| H&I | 3.4 |
| HCDMM | 2.7 |
| HR | 3.0 |
| HRS | 3.8 |
| MSAC | 3.2 |
| OCFO | 3.7 |
| OC | 3.0 |
| OPIM | 5.0 |
| OCIO | 2.6 |
| OIG | 4.0 |
| RS | 3.5 |
| SuitEA | 4.0 |
| Average | 3.6 |

Office-level Perceptions of Statistics Quality

| Office | Statistics |
|---|---|
| ES | 3.4 |
| FSEM | 5.0 |
| H&I | 3.3 |
| HCDMM | 3.0 |
| HR | No response |
| HRS | 3.6 |
| MSAC | 2.8 |
| OCFO | 3.7 |
| OC | 3.0 |
| OPIM | 3.0 |
| OCIO | 2.6 |
| OIG | 4.0 |
| RS | 3.1 |
| SuitEA | 2.8 |
| Average | 3.3 |

Office-level Perceptions of Research Rigor

| Office | Research |
|---|---|
| ES | 4.2 |
| FSEM | 4.0 |
| H&I | 3.4 |
| HCDMM | 2.3 |
| HR | _ |
| HRS | 3.6 |
| MSAC | 2.8 |
| OCFO | 3.3 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 2.6 |
| OIG | 4.0 |
| RS | 3.3 |
| SuitEA | 3.3 |
| Average | 3.4 |

Office-level Perceptions of Analysis Rigor

| Office | Analysis |
|---|---|
| ES | 4.2 |
| FSEM | 4.0 |
| H&I | 3.6 |
| HCDMM | 2.3 |
| HR | _ |
| HRS | 3.9 |
| MSAC | 2.8 |
| OCFO | 3.3 |
| OC | 3.0 |
| OPIM | 3.0 |
| OCIO | 2.4 |
| OIG | 4.0 |
| RS | 3.3 |
| SuitEA | 3.3 |
| Average | 3.5 |

Office-level Perceptions of Evaluation Rigor

| Office | Evaluation |
|---|---|
| ES | 3.2 |
| FSEM | 4.0 |
| H&I | 3.6 |
| HCDMM | 2.3 |
| HR | _ |
| HRS | 3.7 |
| MSAC | 3.0 |
| OCFO | 3.3 |
| OC | 3.0 |
| OPIM | 4.0 |
| OCIO | 2.3 |
| OIG | 4.0 |
| RS | 3.3 |
| SuitEA | 3.3 |
| Average | 3.3 |

Office-level Perceptions of Statistics Rigor

| Office | Statistics |
|---|---|
| ES | 3.3 |
| FSEM | 4.0 |
| H&I | 3.6 |
| HCDMM | 2.7 |
| HR | _ |
| HRS | 3.5 |
| MSAC | 2.3 |
| OCFO | 3.3 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 2.4 |
| OIG | 4.0 |
| RS | 3.1 |
| SuitEA | 3.0 |
| Average | 3.2 |

Office-level Perceptions of Research Independence

| Office | Research |
|---|---|
| ES | 4.5 |
| FSEM | 5.0 |
| H&I | 4.0 |
| HCDMM | 3.0 |
| HR | _ |
| HRS | 4.2 |
| MSAC | 3.2 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 3.0 |
| OIG | 4.0 |
| RS | 3.3 |
| SuitEA | 4.0 |
| Average | 3.8 |

Office-level Perceptions of Analysis Independence

| Office | Analysis |
|---|---|
| ES | 4.8 |
| FSEM | 5.0 |
| H&I | 4.0 |
| HCDMM | 3.0 |
| HR | _ |
| HRS | 4.2 |
| MSAC | 3.6 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 3.0 |
| OIG | 4.0 |
| RS | 3.3 |
| SuitEA | 4.0 |
| Average | 3.9 |

Office-level Perceptions of Evaluation Independence

| Office | Evaluation |
|---|---|
| ES | 3.8 |
| FSEM | 5.0 |
| H&I | 3.8 |
| HCDMM | 3.0 |
| HR | _ |
| HRS | 4.1 |
| MSAC | 3.6 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 3.0 |
| OIG | 4.0 |
| RS | 3.2 |
| SuitEA | 4.3 |
| Average | 3.8 |

Office-level Perceptions of Statistics Independence

| Office | Statistics |
|---|---|
| ES | 3.8 |
| FSEM | 5.0 |
| H&I | 3.7 |
| HCDMM | 3.0 |
| HR | _ |
| HRS | 4.2 |
| MSAC | 3.5 |
| OCFO | 3.5 |
| OC | 3.0 |
| OPIM | 5.0 |
| OCIO | 3.2 |
| OIG | 4.0 |
| RS | 3.2 |
| SuitEA | 3.8 |
| Average | 3.8 |

Office-level Perceptions of Research Use

| Office | Research |
|---|---|
| ES | 4.2 |
| FSEM | 3.0 |
| H&I | 4.0 |
| HCDMM | 3.7 |
| HR | 4.0 |
| HRS | 4.0 |
| MSAC | 3.4 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 4.0 |
| OCIO | 3.0 |
| OIG | 3.0 |
| RS | 3.5 |
| SuitEA | 3.3 |
| Average | 3.7 |

Office-level Perceptions of Analysis Use

| Office | Analysis |
|---|---|
| ES | 4.2 |
| FSEM | 3.0 |
| H&I | 4.0 |
| HCDMM | 3.7 |
| HR | 3.0 |
| HRS | 4.0 |
| MSAC | 3.6 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 4.5 |
| OCIO | 2.7 |
| OIG | 3.0 |
| RS | 3.5 |
| SuitEA | 3.5 |
| Average | 3.7 |

Office-level Perceptions of Evaluation Use

| Office | Evaluation |
|---|---|
| ES | 3.4 |
| FSEM | 3.0 |
| H&I | 3.8 |
| HCDMM | 3.7 |
| HR | 3.0 |
| HRS | 3.9 |
| MSAC | 3.6 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 2.7 |
| OIG | 3.0 |
| RS | 3.6 |
| SuitEA | 3.3 |
| Average | 3.6 |

Office-level Perceptions of Statistics Use

| Office | Statistics |
|---|---|
| ES | 3.6 |
| FSEM | 3.0 |
| H&I | 3.5 |
| HCDMM | 3.7 |
| HR | 3.0 |
| HRS | 3.7 |
| MSAC | 3.3 |
| OCFO | 4.0 |
| OC | 3.0 |
| OPIM | 3.5 |
| OCIO | 2.2 |
| OIG | 3.0 |
| RS | 3.4 |
| SuitEA | 3.0 |
| Average | 3.4 |